The system is based on human sketches, but doesn’t imitate the human doodles exactly, Google Research says, instead creating its own new drawings. While the system still starts with a sketch, the program reconstructs the original drawing in a unique way. That’s because the team deliberately added noise between the encoder and decoder, so that the computer can’t recall the exact sketch.
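
The idea of adding noise between the encoder and decoder can be illustrated with a few lines of Python. This is only a minimal sketch, not the researchers' code: the function name `add_noise`, the three-number `latent` vector, and the choice of Gaussian noise are all assumptions made for illustration.

```python
import random

def add_noise(latent, noise_scale, rng):
    """Return a copy of the encoder's output with random noise added.

    Gaussian noise is an assumption here; the article only says that
    noise is added between the encoder and the decoder.
    """
    return [value + noise_scale * rng.gauss(0.0, 1.0) for value in latent]

rng = random.Random(0)          # seeded so the example is repeatable
latent = [0.5, -1.2, 0.3]       # hypothetical encoding of an input sketch
noisy = add_noise(latent, 0.1, rng)
print(noisy)
```

Because the decoder would only ever see the perturbed values, it cannot simply replay the input stroke for stroke.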

The goal isn’t to teach computers to copy a drawing, but to see whether neural networks can create drawings of their own. To do that, Google researchers David Ha and Douglas Eck trained the system on the motor sequences behind 70,000 drawings, such as the direction of each pen movement and when the pen is lifted, instead of simply inputting thousands of already completed drawings.
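
To make the notion of a motor sequence concrete, here is a rough Python sketch that turns a drawing into a list of pen moves, each recording how far the pen travels and whether it is lifted afterward. The function `to_pen_moves` and the sample coordinates are hypothetical, and the exact format the researchers used may differ.

```python
def to_pen_moves(strokes):
    """Convert strokes (lists of absolute (x, y) points) into
    (dx, dy, pen_lifted) steps, starting from the origin."""
    moves = []
    prev_x, prev_y = 0, 0
    for stroke in strokes:
        for i, (x, y) in enumerate(stroke):
            # The pen is lifted after the last point of each stroke.
            pen_lifted = 1 if i == len(stroke) - 1 else 0
            moves.append((x - prev_x, y - prev_y, pen_lifted))
            prev_x, prev_y = x, y
    return moves

# Two strokes: an L-shaped line, then a short dash drawn elsewhere.
example = [[(0, 0), (10, 0), (10, 10)], [(20, 20), (25, 20)]]
moves = to_pen_moves(example)
print(moves)
# [(0, 0, 0), (10, 0, 0), (0, 10, 1), (10, 10, 0), (5, 0, 1)]
```

A model trained on sequences like this learns how a drawing unfolds over time, not just what the finished image looks like.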

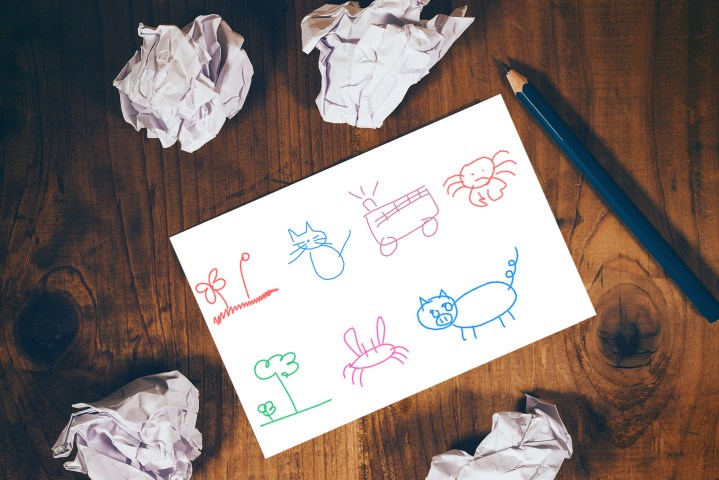

While the system’s cat drawings don’t look much better than a preschooler’s, sketch-rnn is capable of creating a unique sketch. To test the program’s ability to draw a unique cat, the team also fed it odd drawings, like a three-eyed cat. “When we feed in a sketch of a three-eyed cat, the model generates a similar looking cat that has two eyes instead, suggesting that our model has learned that cats usually only have two eyes,” Ha wrote.

If the input sketch actually shows a different object but is still labeled as a cat, the program still draws a cat, though the overall shape mimics the original drawing. The same holds for other animals: when the researchers drew a truck and told the computer it was a pig, they got a truck-shaped pig.

So what’s the real-world application? Google Research says the program can help designers quickly generate a large number of unique sketches. Eventually, the program could also be used to teach drawing, to learn more about the way humans sketch, or to finish incomplete drawings.