AMD’s Radeon graphics business has been sitting firmly in second place for a number of years, and despite a recent resurgence in fortunes and performance, Nvidia retains a firm hold on graphics card mindshare and market dominance. But AMD hasn’t always been the underdog. In fact, there have been several instances over the years where it stole pole position and captured the hearts and minds of gamers with a truly unique GPU release.

Here are some of the best AMD (and ATI) graphics cards of all time, and what made them so special.

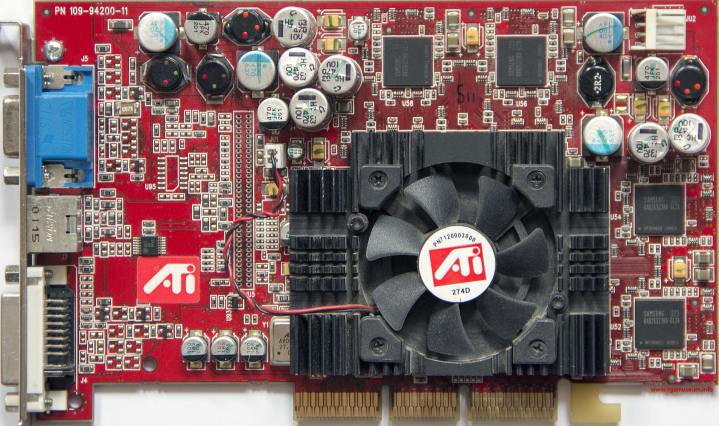

Radeon 9700 Pro

Radeon makes a name for itself

The Radeon 9700 Pro is technically not an AMD card, but rather an ATI card. AMD only got into the GPU business by acquiring ATI in 2006, and in doing so inherited ATI’s rivalry with Nvidia. But leaving ATI out would be a mistake, because although Nvidia largely dominated the GPU scene in the pre-AMD era, there was one moment where everything aligned in just the right way for ATI to make its mark.

Nvidia had established itself as the leading GPU manufacturer after it launched the famous GeForce 256 in 1999, and in the following years, ATI struggled to compete with its first generation of Radeon GPUs: the 7000 series. The 8000 series shrank the gap between Radeon and GeForce but didn’t close it. Worse, reviewers at the time felt the flagship Radeon 8500 came out way too soon and had very poor driver support.

However, behind the scenes ATI was working on the R300 architecture that would power the 9000 series, and ended up creating a GPU larger than anything that came before it. With a die size of over 200 mm² and over 110 million transistors, it was absolutely gargantuan for the time. For comparison, Nvidia’s GeForce 4 GPU capped out at 63 million transistors.

When the Radeon 9700 Pro launched in 2002, it blew Nvidia’s flagship GeForce 4 Ti 4600 out of the water, sometimes running over twice as fast. Even though the Ti 4600 was about $100 cheaper than the 9700 Pro, AnandTech recommended the Radeon GPU in its review. It was just that fast.

In fact, all across the stack the 9000 series did quite well, partly due to the fact that Nvidia’s newer GeForce FX series struggled to compete. The top-end 9800 XT’s high performance was well received (though it was criticized for being expensive) and the mid-range 9600 XT also received accolades from websites such as The Tech Report, which declared “it would almost be irresponsible for me to not give the Radeon 9600 XT our coveted Editor’s Choice award.” However, this success wasn’t to last very long.

Radeon HD 4870

Small but powerful

After the 9000 series, ATI gradually lost more and more ground to Nvidia, especially after the legendary GeForce 8800 GTX launched in late 2006 to critical acclaim. In fact, AnandTech described the launch of the GeForce 8 series as “9700 Pro-like,” which not only cemented the 9000 series’ reputation but also showed how far ahead Nvidia was with the 8 series.

By this time, AMD had acquired ATI, and with their combined resources, the two companies tried to work out a successful strategy for competing with Nvidia. Ever since the 9700 Pro, winning had meant launching the biggest GPU. A 200 mm² die was big in 2002, but the top-end GeForce 8 series GPU in 2006 was almost 500 mm², and even today that’s a pretty big GPU. The problem for AMD and ATI was that producing big GPUs was expensive, and there simply wasn’t enough money to fund another one.

What AMD and ATI decided to do next was called the small-die strategy. Instead of making really big GPUs and trying to win with raw power, AMD wanted to focus on high-density, high-performance GPUs with small die sizes (200-300 mm²) that were almost as fast as Nvidia’s flagship GPUs. This way, AMD could sell its GPUs at an extremely low price and, hopefully, roll right over Nvidia despite not having a halo product.

The first small-die strategy GPUs were the HD 4000 series, which launched in 2008 with the HD 4870 and HD 4850. Up against Nvidia’s GTX 200 series, AMD’s small-die strategy was a big success. The HD 4870 was praised for beating the GTX 260 at $100 less, with AnandTech describing the GPU as “in a performance class beyond its price.” The HD 4870 was also nipping at the GTX 280’s heels, despite the 280’s die being over twice as large.

AMD hadn’t abandoned the high end, however, and wanted to leverage its multi-GPU technology, CrossFire, to make up for the lack of big Radeon GPUs. Not every reviewer believed this was a good strategy, though, with The Tech Report calling it “hogwash” at the time.

Ultimately, that assessment proved correct, as large, monolithic GPUs weren’t going anywhere any time soon.

Radeon HD 5970

The GPU that was too fast

Nevertheless, AMD was not deterred from its small-die strategy and continued on with the HD 5000 series. Nvidia was struggling to get its next-generation GPUs out the door, which meant the aging GTX 285 (a refreshed GTX 280) had to compete against the brand-new Radeon GPUs. Unsurprisingly, the HD 5870 trounced the 285, putting AMD back in the lead with a single-GPU card for the first time since the 9800 XT.

As multi-GPU setups were crucial for the small-die strategy, AMD also launched the HD 5970, a graphics card with two GPUs running in CrossFire. The 5970 was so fast that multiple publications said it was too fast to actually matter, with AnandTech describing the phenomenon as “GPUs are outpacing games.” The Tech Report considered the 5970 a niche product for this reason, but nevertheless called it the “obvious winner” and didn’t complain about CrossFire either.

For six whole months, AMD ruled the GPU market and had a massive lead over Nvidia’s 200 series in performance and efficiency. In early 2010, Nvidia finally launched its brand-new GTX 400 series based on the Fermi architecture, which, especially at the top end, was power-hungry, hot, and loud. The GTX 480 was barely any faster than the HD 5870, and well behind the HD 5970. Two 480s in SLI could beat the 5970, but at nearly double the power consumption, such a configuration was ludicrous. The 480 ran so hot in testing that AnandTech worried it could die prematurely in regular use.

The HD 5000 series was AMD’s high-water mark for discrete GPU market share, with AMD coming just short of overtaking Nvidia in 2010. However, in overall graphics (including integrated and embedded graphics), AMD enjoyed higher market share from 2011 to 2014. Although Nvidia had been beaten badly by HD 5000, it wouldn’t be long before the tables were turned.

Radeon HD 7970

Breaking the GHz barrier

Although Nvidia’s 400 series was quite bad, the company managed to improve upon it with the 500 series, which launched in late 2010. The newer and better GTX 580 was faster and more efficient than the GTX 480, and it crept up on the HD 5970. Around the same time, AMD also launched its next-generation HD 6000 GPUs, but the top-end HD 6970 (a single-GPU card, not a dual-GPU one) didn’t blow reviewers away with either its performance or its price.

To make matters worse, Nvidia would be moving to TSMC’s newest 28nm process with its next-generation cards, and this was a problem for AMD because the company had always been ahead when it came to nodes. In order to get the most out of the 28nm node, AMD retired the old TeraScale architecture that had powered Radeon since HD 4000 and introduced the new Graphics Core Next (GCN) architecture, which was designed for both gaming and general-purpose compute workloads. At the time, AMD thought it could save money by using one design for both.

The HD 7000 series launched with the HD 7970 in early 2012, and it beat the GTX 580 pretty conclusively. However, it was more expensive than its HD 4000 and 5000 series predecessors. AnandTech noted that while AMD had made “great technological progress” in recent years, the company actually making money was Nvidia, which was largely why AMD hadn’t priced the HD 7970 as aggressively as older Radeon GPUs.

But the story doesn’t stop there. Just two months later, Nvidia launched its new 600 series, and it was very bad … for AMD. The GTX 680 beat the HD 7970 not just in performance, but also in efficiency, which had been a key strength of Radeon GPUs ever since the HD 4000 series. To add insult to injury, the 680 was actually smaller than the 7970, at around 300 mm² to the 7970’s 350 mm².

And Nvidia pulled this off on the same 28nm node the 7970 used, so AMD no longer had a process advantage to fall back on.

That said, the 7970 wasn’t much slower than the 680, and since the 7970 was never going to be as efficient as the 680 anyway, AMD decided to launch the 7970 again with much higher clock speeds, as the HD 7970 GHz Edition. It was the world’s first GPU that ran at 1 GHz out of the box, and it drew level with the GTX 680. The 7970 wasn’t a 4870 or a 5970, but its back-and-forth battle with the GTX 680 was impressive at the time.

There was just one problem: it was hot and loud, “too loud” according to AnandTech. Nvidia had also launched a hot and loud GPU just a couple of years before, and fixed it by moving to the next node. AMD could just do the same thing, right?

Radeon R9 290X

Victory, but at what cost?

As it turns out, no, AMD could not just move to the next node, because almost every single foundry in the world ran into a brick wall around the 28nm mark, which has been recognized as the beginning of the end of Moore’s Law. While TSMC and other fabs continued making theoretically better nodes, AMD and Nvidia stuck with the 28nm node because these newer nodes weren’t really much better and weren’t suitable for GPUs. This was an existential problem for AMD, because the company had always been able to rely on moving to the newer node to remain ahead of Nvidia when it came to efficiency.

Still, AMD had some ways out. The HD 7970 was only around 350 mm², and the company could always make a bigger GPU with more cores and a wider memory bus. AMD could also improve GCN, but that was difficult because GCN was doing double duty as both a gaming and a compute architecture. Finally, AMD could always launch its next GPUs at lower prices.

Nvidia had already beaten AMD to the next generation by mid-2013 with its new GeForce 700 series, led by the GTX 780 and the GTX Titan, which were much faster (and more expensive) than the HD 7970 GHz Edition. But launching second wasn’t bad for AMD, since it gave the company a chance to choose the right price for its upcoming Radeon 200 series, which launched in late 2013 with the R9 290X.

The 290X was almost the perfect GPU. It beat the 780 and the Titan in almost every game while being much cheaper at $549 to the 780’s $649 and the Titan’s $999. The 290X was a “price/performance monster.” It was the fastest GPU in the world. There was just one slight problem with the 290X, and it was the same problem the HD 7970 GHz Edition had: It was hot and loud.

A large part of the problem was that the R9 290X reused the cooler from the reference HD 7970, and since the 290X drew more power, the GPU ran at a higher temperature (up to a blazing 95 degrees Celsius) and its single blower fan had to spin even faster. AMD had pushed the envelope just a bit too far, and it was essentially a repeat of Fermi and the GTX 480. Despite the greatness of the 290X, it was the first of many hot and loud AMD GPUs.

Radeon RX 480

A new hope

When the RX 480 launched in mid-2016, it had been nearly three years since the 290X had claimed the performance crown. Those three years were some of the toughest for AMD, as everything seemed to go wrong for the company. On the CPU side, AMD was still stuck with the infamously poor Bulldozer architecture, and on the GPU side AMD had launched the R9 390X in 2015, which was just a refreshed 290X. The Fury lineup wasn’t great either and couldn’t keep up with Nvidia’s GTX 900 series. It really looked like AMD might even go bankrupt.

Then there was hope. AMD restructured itself in 2015 and created the Radeon Technologies Group (RTG), led by veteran engineer Raja Koduri. RTG’s first product was the RX 480, a GPU based on the Polaris architecture that was aimed purely at the midrange, a throwback to the small-die strategy. The 480 was no longer built on the old TSMC 28nm process but on GlobalFoundries’ 14nm process, which was a much-needed improvement.

At $200 for the 4GB model, the 480 was received very positively by reviewers. It not only beat the midrange GTX 960 (which to be fair was over a year old) but also previous generation AMD GPUs that had been way more expensive. It tied GPUs like the R9 290X, the R9 390X, and the GTX 970. It wasn’t power hungry either, thankfully. In our review, we simply said “AMD’s Radeon RX 480 is awesome.”

Unfortunately for the 480, just weeks later Nvidia launched the brand-new GTX 1060, and for the first time in years Nvidia was on a superior node: TSMC’s 16nm. The GTX 1060 was quite a bit better than the 480 and started at $250, the same price as the 480 8GB. To make things worse, the RX 480 consumed quite a bit more power than the GTX 1060 and also launched with a bug that caused it to draw too much power through the PCIe slot.

But surprisingly, that didn’t kill the 480 or its slower but much cheaper counterpart, the RX 470. In fact, the 480 went on to become one of AMD’s most popular GPUs of all time. There are many reasons why this happened, but the primary ones are pricing and drivers. For pretty much all of its life, the RX 480 sold at a very low price, first in the $200-250 range, but by 2017 even add-in board (AIB) partner models with 8GB of VRAM could be found for less than $200. The RX 470 was even cheaper, sometimes going for just over $100. The performance of these GPUs also gradually improved with better drivers and increasing adoption of DX12 and Vulkan: the so-called AMD “Fine Wine” effect.

AMD went on to refresh the 480 as the RX 580 and then the RX 590, which weren’t particularly well received. Nevertheless, the Polaris architecture that powered the RX 480 and other RX 400 and RX 500 series GPUs certainly made its mark despite the odds, and re-established AMD as a relevant company for desktop GPUs.

Radeon RX 5700 XT

Good graphics, promising prospects

Although AMD had gained ground with the RX 400 series, those were only midrange GPUs; there was no RX 490 doing battle with the GTX 1080. AMD did challenge Nvidia with its RX Vega 56 and 64 cards in 2017, but those fell flat. RX Vega had mediocre value: the 64 model was only as fast as the 1080 and significantly slower than the 1080 Ti, and to top it all off, these GPUs were hot and loud. In early 2019, AMD tried again with the Radeon VII (which was based on data-center silicon), but it was a repeat of the original Vega GPUs: mediocre value, unimpressive performance, hot and loud.

However, Nvidia was also struggling because its new RTX 20 series wasn’t very impressive, particularly for the price. For example, the GTX 1080 was 33% faster than the GTX 980 and launched for only $50 more, whereas the RTX 2080 was just 11% faster than the GTX 1080 and launched for $200 more. Ray tracing and A.I. upscaling technology in just a handful of games simply weren’t worth the price at the time.

It was a good opportunity for AMD to counterattack with the RX 5000 series. Codenamed Navi, it was based on the new RDNA architecture and used TSMC’s 7nm node. Similar to the RX 480, the 5700 XT at $449 and the 5700 at $379 weren’t supposed to be high-end GPUs, but were aimed just below, at the upper midrange, specifically at Nvidia’s RTX 2060 and RTX 2070 GPUs. In our review, we would have found that the new 5000 series GPUs beat the 2060 and the 2070 just as AMD planned. That is, we would have if Nvidia hadn’t launched three brand-new GPUs on literally the same day the 5000 series came out. The new RTX 2060 Super and RTX 2070 Super were faster and cheaper than the old models, and in our review the 5700 XT ended up in second place, albeit at a decent price.

But it wouldn’t be an AMD GPU without at least one scandal. Just days before the RX 5000 series launched, Nvidia announced the RTX Super GPUs, and the 2060 Super and the 2070 Super were priced very aggressively. In order to keep RX 5000 competitive, AMD cut the 5700 XT’s price to $399 and the 5700’s to $349, and pretty much everyone agreed this was the right move. And that should have been the end of it.

Except it wasn’t the end of it, because Radeon VP Scott Herkelman tried to claim this was some kind of mastermind chess move: the RX 5000 price cut had supposedly been planned from the beginning so that Nvidia would be tempted into selling its Super GPUs at a low price, only to end up with worse value anyway. But as ExtremeTech pointed out, AMD wouldn’t have cut prices if Nvidia hadn’t priced the Super GPUs the way it did. It’s more likely AMD cut prices because RX 5000 would have looked bad at the old prices.

Although it didn’t set the world on fire, the 5700 XT proved AMD had potential. It had good performance and a die of just about 250 mm². By comparison, Nvidia’s flagship RTX 2080 Ti was three times as large and only about 50% faster. If AMD could just make a bigger GPU, it could be the first Radeon card to beat Nvidia’s flagship since the R9 290X.

Radeon RX 6800 XT

Radeon returns to the high end

With the RX 5700 XT and the brand-new RDNA architecture, AMD found itself in a very good position. The company had made it to 7nm before Nvidia, and the new RDNA architecture, despite its immaturity, was much better than old GCN. The next step was obvious: make a big, powerful gaming GPU. In early 2020, AMD announced RDNA 2, which would power Navi 2X GPUs, including the rumored “Big Navi” chip. AMD claimed RDNA 2 delivered 50% better performance per watt than the original RDNA architecture, which was not only impressive since RDNA 2 still used the 7nm node, but also crucial for making a powerful, high-end GPU that wasn’t hot and loud.

2020 promised to be a great year for GPUs, as both AMD and Nvidia would be launching their next-generation GPUs, and “Big Navi” was rumored to mark AMD’s return to the high end. As it turns out, 2020 was a terrible year in general, but at least there was still a GPU showdown to look forward to: the RTX 30 series versus the RX 6000 series.

Although the flagships for this generation were Nvidia’s RTX 3090 and AMD’s RX 6900 XT, at $1,499 and $999 respectively, these GPUs weren’t super interesting to most gamers. The real fight was between the RTX 3080 and the RX 6800 XT, which had MSRPs of $699 and $649, respectively.

The RX 6800 XT finally arrived in late 2020, two months after the RTX 3080, and to everyone’s relief, it was a good GPU. The 6800 XT didn’t crush the 3080, but most reviewers, such as TechSpot, found it was a little faster at 1080p and 1440p, and just a bit behind at 4K. At $50 less, the 6800 XT was the first good alternative to high-end Nvidia GPUs in years. Sure, it didn’t have DLSS and it wasn’t very good at ray tracing, but that wasn’t a dealbreaker for most gamers.

Unfortunately, as good as the RX 6800 XT was when it launched only a year and a half ago, there was a new problem to contend with: You couldn’t buy one. The dreaded GPU shortage had totally upended the GPU market, and whether you wanted a 6800 XT or a 3080, it was basically impossible to find any GPU at a reasonable price. This not only put a serious damper on AMD’s return to the high end, but made buying any GPU very painful.

At the time of writing, the GPU shortage has mostly subsided, with AMD GPUs selling for about $50 more than MSRP rather than hundreds of dollars more, while Nvidia GPUs tend to sell for $100 more than MSRP. That makes not only the 6800 XT competitive, but pretty much the entire RX 6000 series.

That’s a solid win for AMD.

So what’s next?

As far as we can tell, AMD is not slowing down for even a moment. AMD promises its next-generation RDNA 3 architecture will deliver yet another 50% improvement in performance per watt, which would make three generations in a row of such gains, an extremely impressive run. RX 7000 GPUs based on RDNA 3 are slated to launch in late 2022, and AMD has confirmed the upcoming GPUs will use chiplets, the technology that allowed AMD to dominate desktop CPUs from 2019 to 2021.

It’s hard to say how much better RX 7000 will be over RX 6000, but if the claims are to be believed, it could be very impressive indeed. If AMD gives RX 7000 a good price, perhaps we’ll have to add it to this list in the months ahead.
