Most spiders have eight eyes, but very few have good eyesight. Instead, they rely on vibrations to navigate and seek out their prey. That’s, in essence, what a spiderweb is: a giant, enormously complicated crisscross of tripwires that can tell a spider exactly when, and where, some delicious bit of food has landed on its web.

As humans, we’re not exactly privy to what that experience would feel like. But Markus Buehler, a Professor of Engineering at the Massachusetts Institute of Technology, has come up with an intriguing way of simulating it — and it involves laser scanning, virtual reality, and the medium of music.

“We have given the silent spiderweb, especially often-overlooked cobwebs, a voice, and shed light on their innate intricate structural complexity,” Buehler told Digital Trends. “[We] made it audible by developing an interactive musical instrument that lets us explore sonically how the spiderweb sounds like as it is being built.”

According to this creation, being on a spiderweb sounds a whole lot like an orchestra of wind chimes, scored by John Carpenter. No wonder spiders perpetually seem on edge!

A world of vibrations

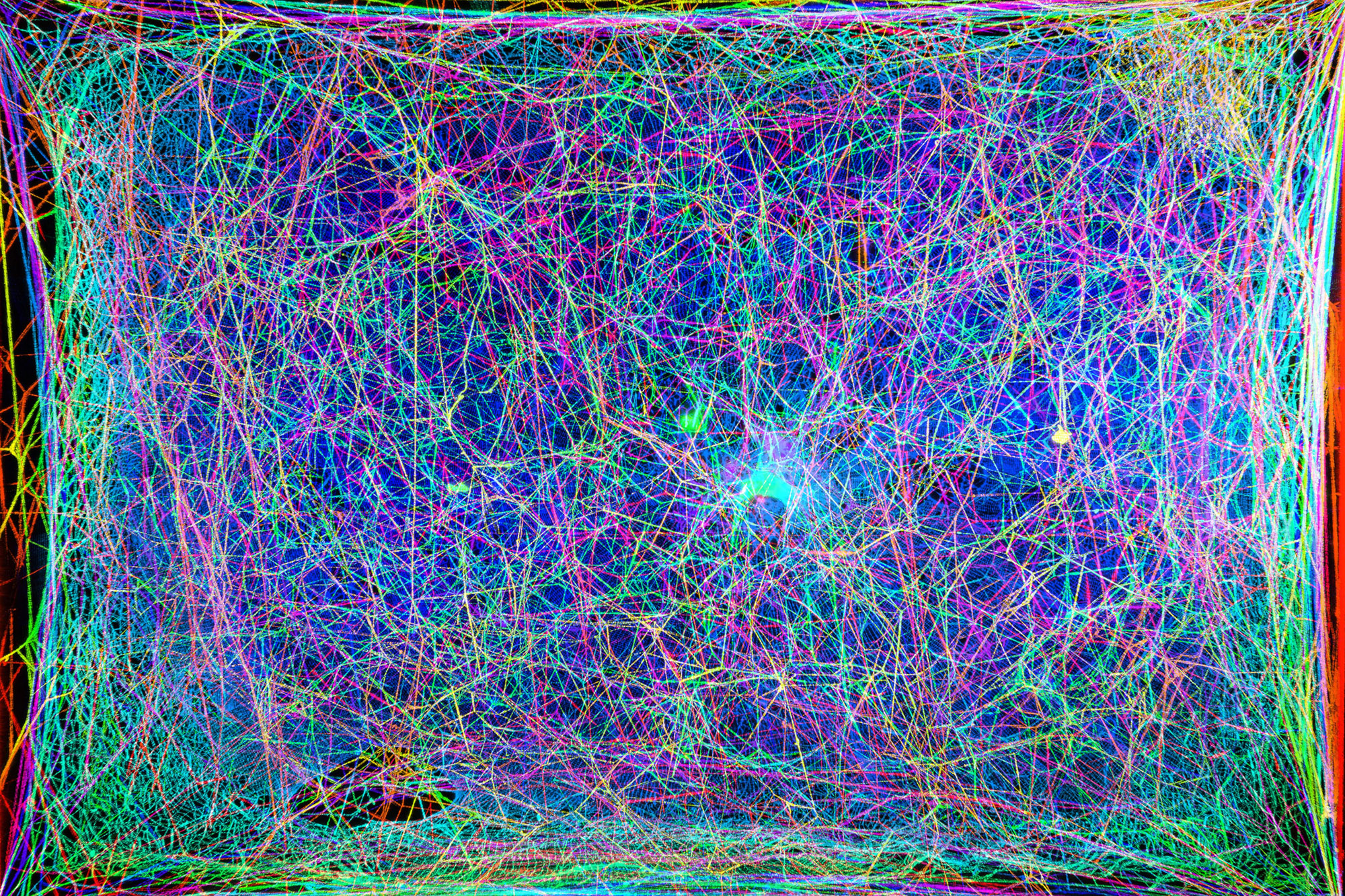

Whether it’s Vivaldi’s “Four Seasons” quartet of violin concertos or Mozart’s use of the Fibonacci sequence, plenty of musicians have been inspired by nature over the years. But none have turned the sounds of the natural world into music with quite the scientific fidelity of Buehler’s creation. To create his biofidelic soundscape, Buehler and fellow researchers used a laser scanner to record every strand of silk in a spiderweb. Not content with scanning the regular, boring webs of any old spider, they focused on the extremely complex web of Cyrtophora citricola, also called the tropical tent-web spider.

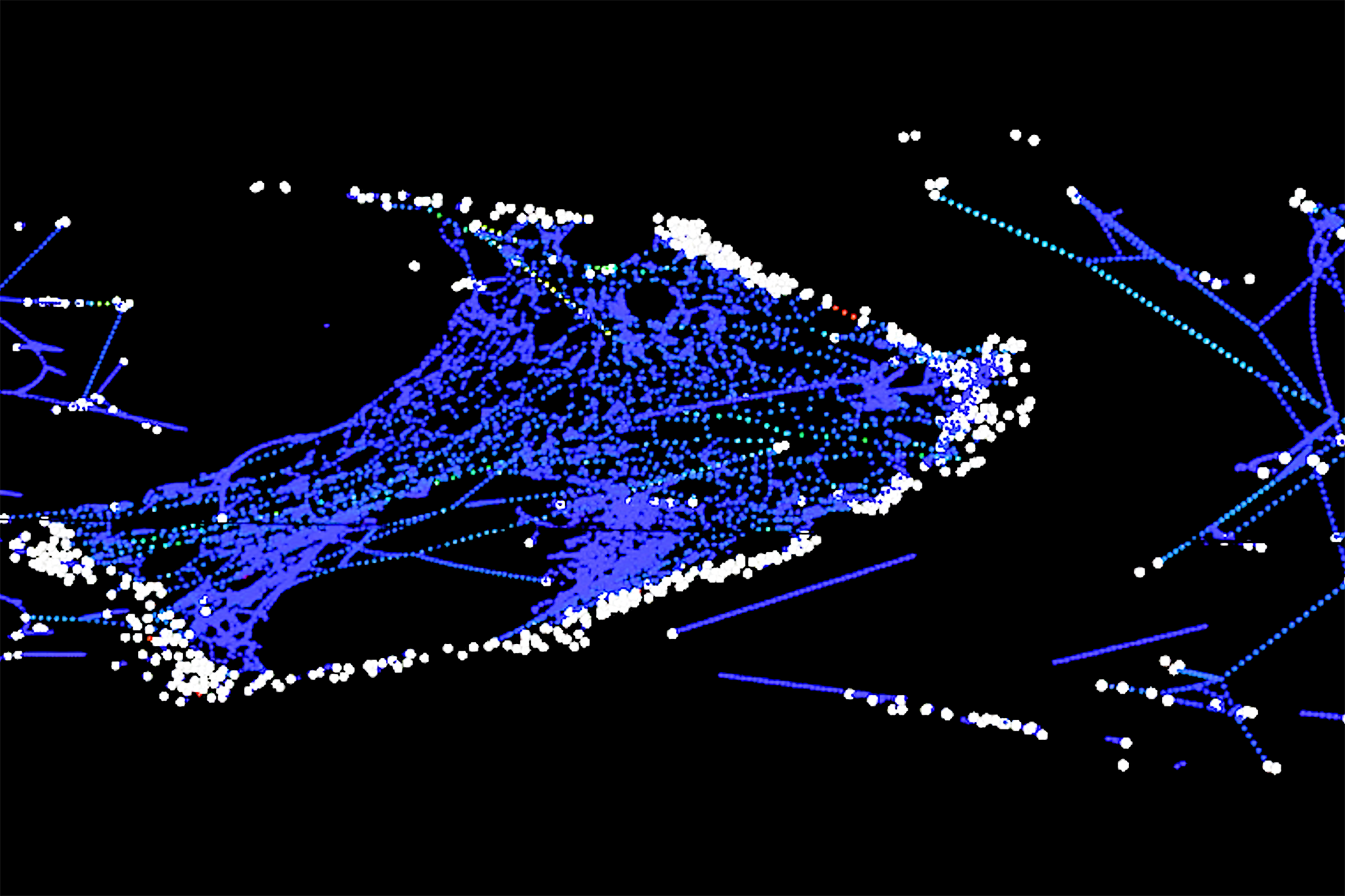

Using a sheet laser scanner, they captured each web as a series of images, then used an algorithm to reassemble those images into a three-dimensional computer model containing the exact location of every filament and connection point. The researchers then calculated the “vibrational patterns” of each strand, drawing on the physics of vibrating strings to model how it resonates. This was a complex job, not just because of the massive number of strands, but because each strand vibrates at a different frequency depending on its size and elasticity. Next, they combined these individual patterns to capture the sonic qualities of the web as a whole.
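The article doesn’t spell out the exact model the team used, so the following is only a rough illustration of the idea. It assumes each strand behaves like an ideal string fixed at both ends, whose harmonics follow the textbook formula f_n = (n / 2L) × sqrt(T / μ), where L is the strand’s length, T its tension and μ its mass per unit length. The function names and strand values are hypothetical placeholders, not data from the scanned web, and “aggregating” is reduced here to pooling every strand’s frequencies into one spectrum.

```python
import math

def string_harmonics(length_m, tension_n, linear_density_kg_m, n_harmonics=3):
    """Return the first n harmonic frequencies (Hz) of an ideal string fixed
    at both ends: f_n = n / (2 * L) * sqrt(T / mu). Hypothetical helper."""
    if min(length_m, tension_n, linear_density_kg_m) <= 0:
        raise ValueError("length, tension and linear density must be positive")
    # Speed of transverse waves along the string, in m/s.
    wave_speed = math.sqrt(tension_n / linear_density_kg_m)
    fundamental = wave_speed / (2 * length_m)
    # Higher harmonics of an ideal string are integer multiples of the fundamental.
    return [n * fundamental for n in range(1, n_harmonics + 1)]

def web_spectrum(strands, n_harmonics=3):
    """Pool every strand's harmonics into one sorted list of frequencies,
    a crude stand-in for combining the sound of the whole web."""
    spectrum = []
    for length_m, tension_n, density in strands:
        spectrum.extend(string_harmonics(length_m, tension_n, density, n_harmonics))
    return sorted(spectrum)

# Hypothetical strands as (length in m, tension in N, mass per length in kg/m).
# Illustrative placeholders only, not measurements from the tent-web spider.
strands = [
    (0.05, 1e-3, 1e-8),
    (0.10, 1e-3, 1e-8),
    (0.08, 2e-3, 2e-8),
]

for freq in web_spectrum(strands):
    print(f"{freq:8.1f} Hz")
```

Even this toy model shows why every strand sings a different note: the 5 cm and 10 cm strands, under the same tension and with the same mass per length, sit exactly an octave apart. Real silk is far less ideal than a guitar string, so a faithful model would likely need more than this single formula.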

Thanks to the 3D model, the researchers (or anyone who dons the necessary headset) can step into VR and explore different sections of the web, getting a sense of how the soundscape changes from one area to the next. The results are a weird blend of the artistic and the scientific, and Buehler wouldn’t have it any other way.

“[I’m interested in] pushing the way we create sound and music, by looking to natural phenomena to solicit vibrational patterns for new types of instruments rather than relying on the tradition of ‘harmonic’ tuning like equal temperament,” he said. “We have [so far] done this for proteins and folding, cracks and fractures in materials, and also for spiderwebs. In each case, [we’re] seeking to assess the innate vibrational patterns of these living materials to work out new ways to conceptualize musical structures.”

Spider music

Buehler said that the work is “driven by my long interest to push the boundary of how and why we create music — to use the universality of vibrations in nature as a direct compositional tool.” He added: “As a composer of experimental and classical and electronic music, my artistic work explores the creation of new forms of musical expression — such as those derived from biological materials and living systems — as a means to better understand the underlying science and mathematics.”

It’s not just about creating unusual electronic music, though. Buehler noted that the work could help students of the natural world better understand the geometry behind how spiders catch their prey. It could also offer a novel approach to designing new materials, by applying the same process to design by sound. “We find that opening up the brain to process more than just the raw data, but using images and sound as creative means, can be powerful in understanding biological methods — and to be creative as an engineer when it comes to out-of-the-box ideas,” he said.

For now, though, it’s enough that someone created a biofidelic spider theme. No, it probably won’t show up in Marvel’s next Spider-Man movie, and it doesn’t have the same relaxing qualities as whale song, but it’s pretty darn neat all the same. Even if it makes the sight of a spider perched on a web, waiting for flies, look a whole lot less peaceful.

Alongside Buehler, contributors to the project included Ian Hattwick, Isabelle Su, Christine Southworth, Evan Ziporyn, and Tomás Saraceno.