Augmented reality (AR) has been a staple of science fiction for decades. Thanks to smartphones, the technology to make it happen is finally here, but nearly two years after we wrote about the untapped potential of augmented reality, it’s still waiting for a killer application. Still, mobile tech keeps moving toward AR, which feels tailor-made for wearable technology, particularly smart glasses. New academic research offers insight into the exciting future possibilities for AR browsers, but it also highlights the barriers that must be overcome.

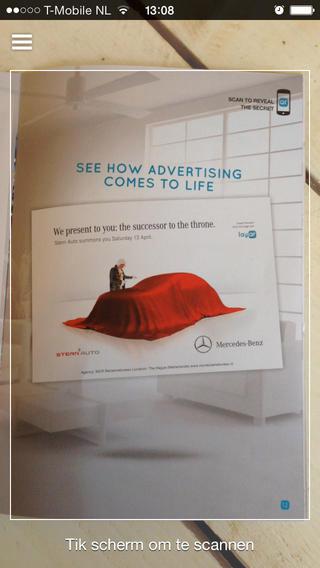

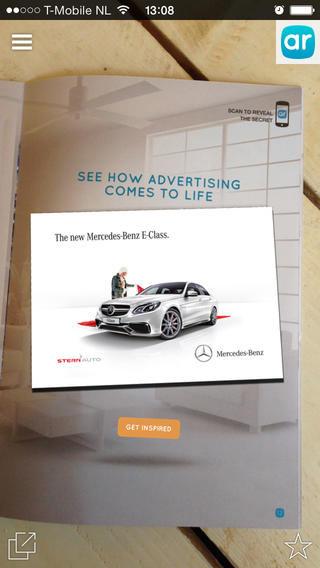

“The really compelling applications that are going to make AR take off just haven’t been built yet,” explained Jules White, Assistant Professor of Computer Science at Vanderbilt University. “Instead what we’ve got primarily in the market are gimmicky advertising experiences.”

Every object around you could give you its data

“AR is about showing you information that’s relevant to the physical objects around you,” says White.

An AR app pointed at your car, for example, could determine that your tire pressure is low or recognize that a part is dangerously worn.

Maybe you’re shopping for light bulbs, but you have no idea which LED bulbs are the best. You take out your smartphone, tell it you want something under $20 at 40 watts with at least a 4-star review, and it highlights the relevant options on the shelf, right there on your screen.

Volkswagen is already doing it

The potential for augmented reality is huge, and it’s finally beginning to come to life.

The Volkswagen MARTA app (Mobile Augmented Reality Technical Assistance) is designed to superimpose information on a view of the vehicle, guiding technicians as they complete a repair or service. It only covers one car right now and it’s for professional mechanics, but it makes so much sense that it’s bound to spread. There’s also the Audi AR app, which works like a user manual: users view the car through their iPhone and get data pop-ups on what they’re looking at.

“What really takes AR to the next level is when you have the domain expert and computer vision expert working together to build applications that are helpful to specific industries,” White explains.

Professor White served as guest editor of the latest special issue of the Proceedings of the IEEE, on Applications of Augmented Reality, and he says they’ve had interest from various industries including medical, automotive, engineering, maintenance and repair, construction, and retail.

This could pave a route toward a killer AR app for the general public. Maybe it will come out of retail. As White suggests, “they could start with inventory management and planogram management and then realize it’s wonderful and go ahead and allow customers to tap into the same database of knowledge.”

Augmenting the real world better

Current AR browsers, like Wikitude and Layar, tend to superimpose images and text in flat blocks. Positioning is based on geotags, and the data on offer simply floats on top of the real world. Quality and usability are poor, and there’s no standardized platform.

What if the images, text, or even video content were actually overlaid on the surface of a building? You could have a virtual vacancy sign on a hotel with review data, or a giant post-it on the 34th floor of a nearby building with details of the meeting you’re due to have there. That’s an idea put forward in “Next-Generation Augmented Reality Browsers: Rich, Seamless, and Adaptive,” one of the papers from the IEEE journal.

This involves mapping environments and spatial awareness, which essentially means advances in computer vision. The interesting thing is that it doesn’t necessarily have to rely on GPS or other sensors in the phone. Computer vision algorithms, combined with rapidly improving camera hardware, can actually position you more accurately than consumer-grade GPS, even when it’s assisted by Wi-Fi.

If you have a database of images, then a server in the cloud can analyze imagery from your phone, compare it to what’s in the database, and potentially determine your position down to the millimeter.
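To make the idea concrete, here is a minimal sketch of image-based positioning, assuming each reference photo has already been boiled down to a short list of numbers (a "feature vector") and tagged with a known position. The database contents, the three-number vectors, and the function name `estimate_position` are all hypothetical, invented for illustration; a real system would use far richer computer vision features and geometry to refine the result.

```python
import math

# Hypothetical reference database: each entry pairs a made-up feature
# vector (standing in for what a vision algorithm would extract from a
# photo) with the position, in meters on a local map, where it was taken.
REFERENCE_IMAGES = [
    {"features": [0.9, 0.1, 0.3], "position": (0.0, 0.0)},
    {"features": [0.2, 0.8, 0.5], "position": (12.0, 4.0)},
    {"features": [0.4, 0.4, 0.9], "position": (30.0, -7.5)},
]


def estimate_position(query_features, database):
    """Return the position of the reference image that looks most similar.

    Similarity is plain Euclidean distance between feature vectors; this
    is a simplification chosen for the sketch, not a production method.
    """
    best = min(database, key=lambda entry: math.dist(query_features, entry["features"]))
    return best["position"]


# A phone photo whose features closely resemble the first reference image.
print(estimate_position([0.85, 0.15, 0.3], REFERENCE_IMAGES))
```

The finer the granularity you want, the more reference images you need, which is exactly the database-building cost discussed here.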

Logistically it’s a difficult proposition, but that didn’t stop Google from developing Street View. The level of detail you need depends on what you’re trying to do. Building the database could be expensive, and you’d need to cover all the angles and perspectives people are likely to view an environment from if you want fine granularity. Then you have to consider that the real world changes all the time, although White suggests crowdsourcing could solve this issue: “when AR reaches that critical mass and everyone is using it all the time it will be easy because people are taking photos of everything constantly.”

Identifying objects better than Google Goggles

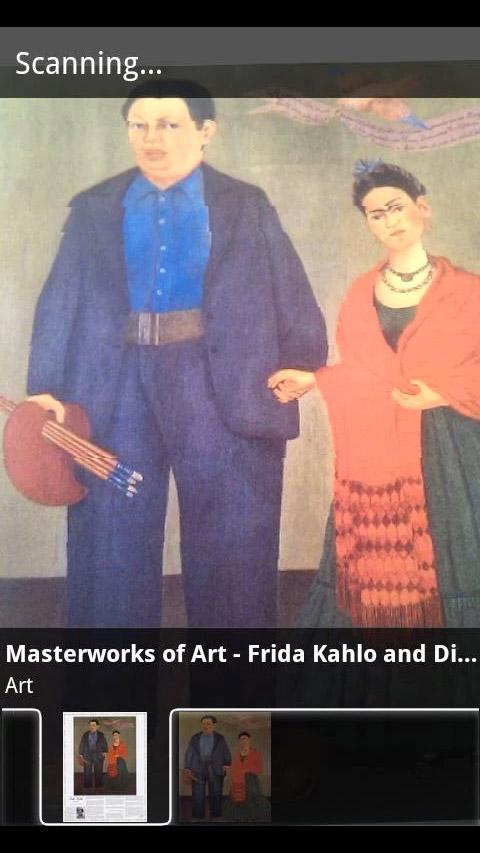

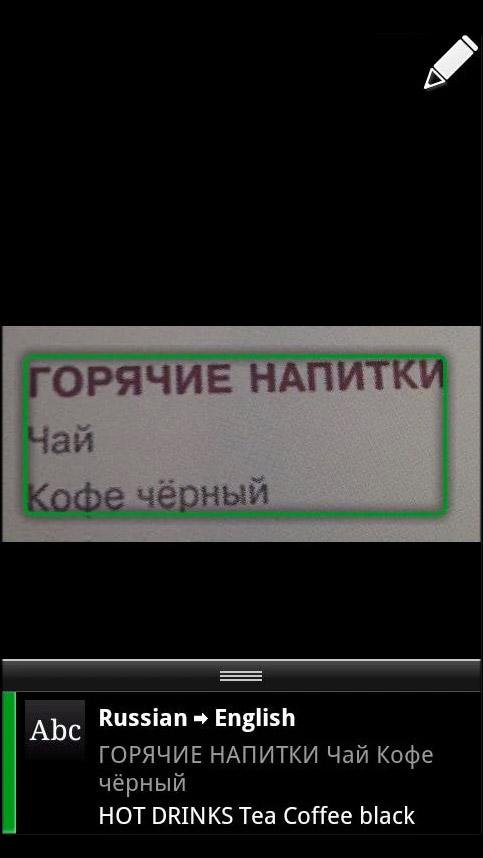

There’s another side to this and that’s the Google Goggles approach, where your AR browser is able to recognize objects and products and offer up useful information about them. We’ve moved beyond the old approach of scanning a single object with something like the barcode scanner app.

As White explains, “AR ups the bandwidth considerably, rather than scan one image you can take a picture of the entire aisle,” and this could produce a listing of the top 10 products out of 1,000 that you can filter by review score, price, and whether they’re in stock. It’s not just about telling you what things are; it’s about providing “intelligence to help you make a decision.”
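Once the products in the aisle have been recognized, the filtering step itself is simple. The sketch below assumes the recognition has already happened and produced a list of products; the `Product` fields, the sample data, and the ranking rule (best review first, cheapest first on ties) are assumptions made for illustration, not anything described by White or the paper.

```python
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    price: float         # in dollars
    review_score: float  # average stars, 0 to 5
    in_stock: bool


def top_products(products, max_price, min_score, limit=10):
    """Keep in-stock products within budget and above the review threshold,
    then rank by review score (highest first), breaking ties on price."""
    matches = [
        p for p in products
        if p.in_stock and p.price <= max_price and p.review_score >= min_score
    ]
    matches.sort(key=lambda p: (-p.review_score, p.price))
    return matches[:limit]


# Hypothetical result of photographing a shelf of light bulbs.
aisle = [
    Product("Bulb A", 12.99, 4.5, True),
    Product("Bulb B", 24.00, 4.8, True),   # over budget
    Product("Bulb C", 8.50, 3.9, True),    # review score too low
    Product("Bulb D", 15.00, 4.5, False),  # out of stock
    Product("Bulb E", 9.99, 4.2, True),
]

for product in top_products(aisle, max_price=20, min_score=4.0):
    print(product.name, product.price, product.review_score)
```

The hard part in practice is the recognition that feeds this list, not the filter.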

What about smart glasses?

There’s no doubt that smart glasses could be a major driver of AR, but are they ready for prime time? The technical capabilities of smart glasses right now would preclude things like real-time video compositing, which could be possible on a smartphone. There are also issues with the interface that voice controls can’t always solve. If you were looking at an engine, for example, you’d want to be able to point to a specific part, especially if you don’t know what it is.

We’re already seeing concepts for how a new kind of interface might work. Samsung recently patented an AR keyboard that would project onto your fingers, and eye tracking is improving all the time.

White suggests, “the more immersive the experiences become, the more natural we expect the interface to be.”

That level of immersion also raises safety concerns. What if an AR display or animation distracts you from a real-world event and causes an accident? Then there’s cybersecurity. What if someone hacks into your AR display and alters information? It’s going to take some time to work these things out.

White acknowledges the part that projects like Google Glass have played in raising the profile of AR, but he feels mobile phones and tablets will drive AR in the short term because “it doesn’t require people to change their behavior … people are used to using their phone cameras to take pictures of things around them that interest them.”

How close are we, really?

The “Next-Generation Augmented Reality Browsers: Rich, Seamless, and Adaptive” paper suggests that for AR to hit the mainstream it needs to be easier to register points of interest. Content needs to move beyond static images/text to video, audio, and even 3D models and animations, and we need better user interfaces to help us interact and extract useful information.

“While more work remains to be done, we believe these challenges can be addressed and resolved within a reasonably near future, hopefully leading to large-scale adoption of AR,” concludes the paper.

We’re sold. If someone with deep enough pockets puts the resources into it, then we could start seeing some truly awesome AR applications. Hopefully someone will, and two years from now we can talk about how successful AR has become.