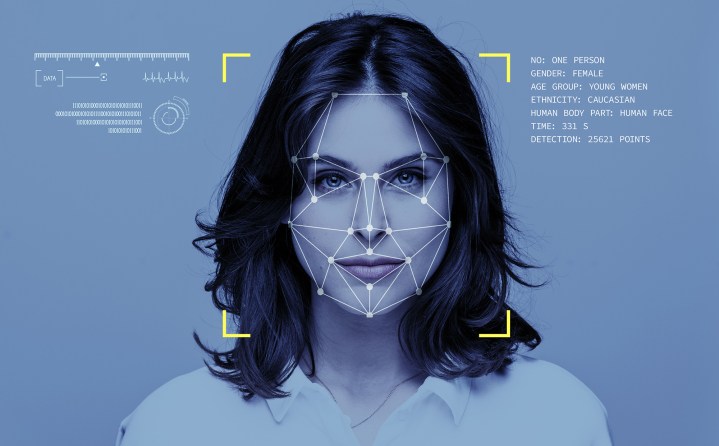

The latest example of Silicon Valley’s hubris is the facial-recognition app Clearview AI. The small startup’s app is so powerful that someone could walk up to you on the street, snap your photo, and quickly find out your name, address, and phone number, according to a report in The New York Times.

This technology probably sounds like a great idea to two types of people: law enforcement and creeps. Advocates worry this kind of facial-recognition technology could be a boon to stalkers, people with a history of committing domestic abuse, and anyone else who would want to find out everything about you for a nefarious purpose.

“As the news of this app spread, women everywhere sighed,” said Jo O’Reilly, a privacy advocate with the U.K.-based ProPrivacy, in a statement to Digital Trends. “Once again, women’s safety both online and in real life has come second place to the desire of tech startups to create — and monetize — ever more invasive technology.”

Clearview works, in part, by scraping publicly available data from popular social media sites like Facebook, Twitter, YouTube, and Venmo, among others. A Facebook company spokesman told Digital Trends that “scraping

A new tool for abusers

Stalking has long been an issue for women online, and that’s without a tool that can track someone down based only on their face. For now, Clearview claims it is only selling its services to law enforcement, which has given truly glowing reviews of the technology. “They are strangely silent, however, about the fact that, in the wrong hands, this software could be utilized to harass and stalk almost anyone,” O’Reilly said. “This would be impossible to prevent if the software was made available to the public — a scenario that Clearview investors have acknowledged is possible.”

“Any tech that you develop that will be consumed by humans, you have to think of the flaws,” said Crystal Justice, chief marketing and development officer for the National Domestic Violence Hotline. “Abusers are always looking for the next tool in their toolbox.”

The hotline began reporting on digital abuse as its own specific category in 2015, and has seen a stark increase in the numbers since then, especially among young people. Justice told Digital Trends that the hotline received 371,000 contacts in 2018, and 15 percent of those were digital abuse complaints. On average nationally, one in four women and one in seven men are survivors of domestic violence, according to the hotline’s numbers.

“Survivors make up such a big part of our population,” Justice said. “Tech companies must consider the survivor experience when designing their apps.”

An ongoing problem

This is a persistent pattern in disruptive tech. When Uber launched in 2009, the tech seemed too cool to be true. No longer were we slaves to the taxi industry or incompetent public transit in our respective cities.

Of course, Uber’s model also flew directly in the face of the conventional wisdom we had been taught as children: Don’t get in cars with strangers. Ten years and several thousand lawsuits over sexual assault later, it would seem there was a reason for that conventional wisdom. For a lot of people, getting in a stranger’s car isn’t safe, even when you’re paying to be there.

But this element of thinking about safety, or of having to move through the world constantly being concerned for your physical safety, as many women and people of minority genders must, doesn’t seem to have been on the minds of the (mostly white) men who founded tech companies like Uber, like Twitter, like so many others. They just wanted to make something cool that would change the world. The idea that it would be used for nefarious purposes didn’t cross their minds until it was too late.

Privacy and safety need to be built into an app from the beginning, said Rachel Gibson, senior technology safety specialist for the National Network to End Domestic Violence. “It’s one thing that something is built with good intent, but we know that tech can be misused,” Gibson told Digital Trends. “We think of it as new tech, old behavior. People were still being raped in taxis [before Uber], but we have to think about how technology these days can facilitate old behaviors like stalking and assault.”

Something like Clearview, Gibson said, while it has its uses for law enforcement, certainly brings up questions as to how or why it would be useful to the general public. “Making these things for public consumption puts survivors at risk, and we need to think about the unintended consequences,” she said.

Justice did say she sees the conversation about technology’s role in public safety changing. “The discourse has shifted since MeToo,” she said. “And it’s about time, too. This is not a new issue. We’re not seeing an increase in domestic violence necessarily, we’re seeing that it’s finally being discussed. And now we can include in the complex elements of how tech is part of that.”

Gibson also said she had had conversations with several “major tech companies” (although she wouldn’t specify which), and they said that they were now “trying to be mindful” of building for privacy and security.

Clearview gave Digital Trends a short statement, saying they “take these concerns seriously, and have no plans for a commercial release.” In a blog post, the company emphasized that its product was not available to the public, and that it would be made available “only to law enforcement agencies and select security professionals.”

If you believe that you or someone you know is suffering from intimate partner abuse, contact the National Domestic Violence Hotline 24/7 at 1-800-799-7233.
