Next year, the world of astronomy is set to get even bigger with the first operations of the Vera C. Rubin Observatory. This mammoth observatory is currently under construction at the peak of Cerro Pachón, a mountain in Chile nearly 9,000 feet tall.

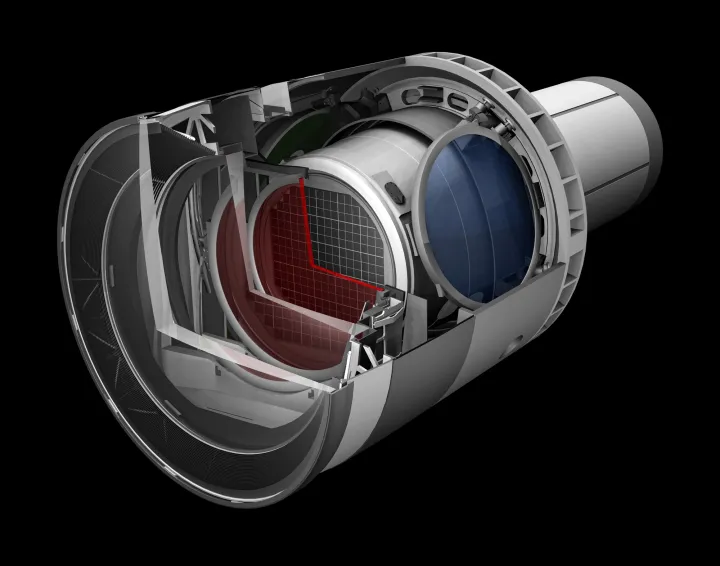

The observatory will house an 8.4-meter telescope that will capture light from far-off galaxies and channel it into the world’s largest digital camera, producing incredibly deep images of the entire southern sky.

How do engineers scale up digital camera technology from something small enough to fit inside your phone to something big enough to capture entire galaxies? To find out, we spoke to Rubin Observatory scientist Kevin Reil about this unique piece of kit and how it could help unravel some of the biggest mysteries in astronomy.

The world’s largest digital camera

On a basic level, the Rubin camera works the same way as the digital camera in your cell phone, though its technology is actually closer to that of cell phone cameras from five years ago: it uses a sensor type called a CCD (charge-coupled device) rather than CMOS, because work on the camera began 10 years ago. The biggest difference is scale. Your phone camera might have a resolution of 10 megapixels, but the Rubin camera has a mind-bending 3,200 megapixels.

To put 3,200 megapixels in more tangible terms, it would take 378 4K TV screens to display a single image at full size, according to SLAC National Accelerator Laboratory, which is building the camera. That kind of resolution would let you see a golf ball from 15 miles away.

To achieve this kind of resolution, every element of the camera hardware must be designed and manufactured with extreme precision. The lenses require particularly careful manufacturing. There are three of them, which help correct aberrations in the incoming light, and each must have a perfectly blemish-free surface.

This is even harder to achieve than the precision required for telescope mirrors, because both sides of each lens must be polished to the same standard. “The challenge is, now, instead of one surface for a mirror, you have two surfaces that have to be perfect,” Reil explained. “All of the optics for this observatory — the lenses and the mirrors — they are the sort of thing that take years to create.”

Even so, the lenses aren’t the hardest part of building such a camera. “It’s a known technology,” Reil said. “It’s hard, but there are companies that know how to make these lenses.”

Where the Rubin camera breaks much newer ground is its sensors. To reach a resolution of 3,200 megapixels, the camera’s 189 sensors must be arranged into an array and tuned until they meet exacting specifications. Each sensor has 16 readout channels, for a total of 3,024 channels.
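
The channel count is simple arithmetic on the figures above. As a rough illustration, the short Python sketch below also divides the 3,200 megapixels evenly across sensors and channels; that even split is a simplifying assumption rather than a published per-sensor specification, and the variable names are purely illustrative.

```python
# Figures quoted in the article.
SENSORS = 189
CHANNELS_PER_SENSOR = 16
TOTAL_MEGAPIXELS = 3_200

total_channels = SENSORS * CHANNELS_PER_SENSOR

# Assumption for illustration: pixels are split evenly across sensors/channels.
megapixels_per_sensor = TOTAL_MEGAPIXELS / SENSORS
megapixels_per_channel = TOTAL_MEGAPIXELS / total_channels

print(f"Readout channels: {total_channels}")
print(f"About {megapixels_per_sensor:.1f} MP per sensor")
print(f"About {megapixels_per_channel:.2f} MP per channel")
```

Under that assumption, each channel is responsible for reading out roughly a million pixels, and all 3,024 channels have to run in parallel for every exposure.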

“For me personally, the biggest challenge has been the sensors,” Reil said. “To have 16 readout channels and 189 sensors, and to read them all out at the same time. So the data acquisition, and really making the sensors meet requirements.”

Those requirements include a very low level of read noise, the grainy texture you see when you take a photo in the dark with your cell phone. Noise like this would disrupt astronomical observations. Cooling the sensors to minus 150 degrees Fahrenheit suppresses the thermally generated noise (known as dark current) within them, but that can only help so much: read noise depends largely on how the sensors and their electronics are built, so the sensors must be manufactured very carefully, something only a handful of companies in the world can do.
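
A standard way to reason about this is the CCD noise model, in which photon shot noise, dark current (thermally generated charge, which cooling suppresses) and read noise add in quadrature. The Python sketch below applies it with made-up numbers, not Rubin's actual sensor specifications: the signal level, dark-current rates, exposure time and read-noise values are all hypothetical, chosen only to show how the noise budget shifts once a sensor is cold.

```python
import math


def pixel_snr(signal_e, dark_e_per_s, exposure_s, read_noise_e):
    """Signal-to-noise ratio of one pixel under the standard CCD noise model.

    All quantities are in electrons. Shot noise (variance = signal),
    dark-current noise (variance = dark rate * exposure time) and read noise
    (variance = read_noise ** 2) are independent, so their variances add.
    """
    noise = math.sqrt(signal_e + dark_e_per_s * exposure_s + read_noise_e ** 2)
    return signal_e / noise


# Hypothetical values for a faint source, for illustration only.
SIGNAL = 10.0    # electrons collected from the source
EXPOSURE = 15.0  # seconds

warm = pixel_snr(SIGNAL, dark_e_per_s=10.0, exposure_s=EXPOSURE, read_noise_e=5.0)
cold = pixel_snr(SIGNAL, dark_e_per_s=0.01, exposure_s=EXPOSURE, read_noise_e=5.0)
cold_low_read = pixel_snr(SIGNAL, dark_e_per_s=0.01, exposure_s=EXPOSURE, read_noise_e=2.0)

print(f"warm sensor:              SNR {warm:.2f}")
print(f"cooled sensor:            SNR {cold:.2f}")
print(f"cooled, lower read noise: SNR {cold_low_read:.2f}")
```

In this toy case, cooling removes almost all of the dark-current contribution, but most of the noise that remains is read noise, so only a better-built sensor improves the result further.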

Another challenge is the camera’s focal plane, the surface where the optics bring incoming light into focus and where the sensors sit. To keep this plane flat to within a few microns, the sensors are mounted on rafts made of silicon carbide, which are then installed in the camera.

A key way a telescope camera differs from a typical digital camera is its use of filters. Instead of capturing color images, telescope cameras take black-and-white images through filters that each pass a different range of wavelengths. These images can then be combined in different ways to pick out different astronomical features.
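
One simple way to combine filtered images, sketched in Python below, is to stretch each black-and-white frame linearly to the range 0 to 255 and assign three of them to the red, green and blue channels of a display image. The helper names `normalize` and `composite` are hypothetical, the images are tiny lists of pixel values, and real astronomical pipelines also calibrate and align the frames and usually apply nonlinear stretches; which filter maps to which color is a presentation choice.

```python
def normalize(band):
    """Linearly stretch a 2D list of pixel values to integers in 0..255."""
    lo = min(min(row) for row in band)
    hi = max(max(row) for row in band)
    span = (hi - lo) or 1  # a perfectly flat frame maps to all zeros
    return [[round(255 * (v - lo) / span) for v in row] for row in band]


def composite(red_band, green_band, blue_band):
    """Combine three aligned, same-sized monochrome frames into RGB pixels."""
    r, g, b = (normalize(band) for band in (red_band, green_band, blue_band))
    return [
        [(r[y][x], g[y][x], b[y][x]) for x in range(len(r[0]))]
        for y in range(len(r))
    ]


# Tiny 2x2 frames standing in for images taken through three filters.
long_wavelength = [[0, 10], [10, 0]]
middle_wavelength = [[1, 1], [1, 1]]
short_wavelength = [[0, 0], [0, 4]]

print(composite(long_wavelength, middle_wavelength, short_wavelength))
# [[(0, 0, 0), (255, 0, 0)], [(255, 0, 0), (0, 0, 255)]]
```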

To do this, the Rubin camera is equipped with six filters, each of which isolates a different band of the electromagnetic spectrum, from the ultraviolet through visible light and into the infrared. The filters are large, round pieces of glass that must be physically moved into the light path, so the camera has a mechanism to swap them in and out as needed. A wheel rotates around the body of the camera to bring the required filter to the top, and an arm then takes the filter and slides it into place between the lenses.

Finally, there’s the shutter: a two-blade system that slides across the face of the lenses and then back to capture an image. “That’s extremely precise,” Reil said. “The distance between those moving blades and lens number three is very, very close.” Getting that spacing exactly right requires careful engineering.

Seeing the wider picture

All of this precision engineering will make Rubin an extremely powerful astronomical tool, but not in the same way as the Hubble Space Telescope or the James Webb Space Telescope, which are designed to zoom in on very distant objects. Instead, Rubin will image huge chunks of the sky at once, surveying the whole sky very quickly.

It will survey the entire southern sky once a week, repeating the task over and over and collecting around 14 terabytes of data each night. With such regularly updated images, astronomers can compare what a given patch of sky looked like last week with what’s there this week, which lets them catch fast-evolving events such as supernovae and track how they change over time.
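
This week-to-week comparison is the idea behind difference imaging. The Python sketch below is a deliberately simplified version: it assumes the two images of the same patch are already aligned and equally sharp (real pipelines must also handle alignment, calibration and changing atmospheric blur), and the function name `find_changes`, the noise level and the 5-sigma threshold are hypothetical choices, not Rubin's software.

```python
def find_changes(last_week, this_week, noise_sigma, threshold=5.0):
    """Return (row, col, change) for every pixel whose brightness changed by
    more than `threshold` times the per-pixel noise between two aligned images."""
    changes = []
    for y, (old_row, new_row) in enumerate(zip(last_week, this_week)):
        for x, (old, new) in enumerate(zip(old_row, new_row)):
            diff = new - old
            if abs(diff) > threshold * noise_sigma:
                changes.append((y, x, diff))
    return changes


# Toy 3x3 patches: mostly noise-level fluctuations, plus one brightening source.
last_week = [[100, 101, 99], [100, 100, 102], [98, 100, 101]]
this_week = [[101, 100, 100], [100, 160, 101], [99, 100, 100]]

print(find_changes(last_week, this_week, noise_sigma=2.0))
# [(1, 1, 60)]
```

Subtracting first and thresholding second means steady stars and galaxies cancel out, so only what actually changed between visits is left to inspect.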

So the challenge isn’t only gathering all that data with the camera hardware; the data also has to be processed very quickly so that astronomers can see new events as they happen.

The data will also be made publicly available. You’ll be able to pick any object in the southern sky and pull up images of it, or simply browse survey data showing the sky in stunning detail.

A deep, large sky survey

Besides being a resource for astronomers tracking how particular objects change over time, the Rubin Observatory will also be important for identifying near-Earth objects. These are asteroids or comets that come close to Earth and could potentially threaten our planet, but they can be difficult to spot because they move across the sky so quickly.

With its large mirror and wide field of view, the Rubin Observatory will be able to identify potentially hazardous objects, those that come particularly close to Earth. And because its data is refreshed so frequently, it should be able to flag objects that warrant follow-up observations by other telescopes.

But the observatory’s greatest contribution may be to the study of dark matter and dark energy. In fact, the observatory is named after American astronomer Vera C. Rubin, whose observations of galaxies in the 1960s and 1970s provided some of the first compelling evidence for dark matter.

The Rubin Observatory will be able to probe the mystery of dark matter by looking at the universe on a very large scale.

“To really see dark matter — well, you can’t,” Reil explained. “But to really study dark matter, you have to look at the galaxy scale.”

By measuring how fast stars near the edge of a galaxy orbit its center, you can work out how much mass must lie between those stars and the galactic center. When astronomers do this, the mass we can see isn’t enough to explain those speeds, “not even close to enough,” Reil said. So there’s a missing amount of mass we need to explain. “That’s the dark matter,” he added.
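
The reasoning can be made concrete with Newton's law of gravity. For a star on a circular orbit of radius r at speed v, setting the centripetal acceleration v²/r equal to the gravitational acceleration GM/r² gives the mass inside the orbit, M = v²r/G, assuming that mass is distributed roughly spherically. The Python sketch below applies this with round numbers similar to the Sun's orbit in the Milky Way (about 220 km/s at about 8 kiloparsecs); these values are illustrative rather than Rubin measurements, and the helper function names are hypothetical.

```python
import math

G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30  # solar mass, kg
KPC = 3.086e19    # one kiloparsec, m


def enclosed_mass(v_km_s, r_kpc):
    """Mass (in solar masses) needed inside radius r to keep a star on a
    circular orbit at speed v, from v**2 / r = G * M / r**2."""
    v = v_km_s * 1e3
    r = r_kpc * KPC
    return v ** 2 * r / G / M_SUN


def keplerian_speed(v_inner_km_s, r_inner_kpc, r_outer_kpc):
    """Orbital speed expected at r_outer if no mass lies beyond r_inner
    (speed then falls off as 1 / sqrt(r))."""
    return v_inner_km_s * math.sqrt(r_inner_kpc / r_outer_kpc)


m_8 = enclosed_mass(220, 8)
m_16 = enclosed_mass(220, 16)  # same speed twice as far out: a flat rotation curve

print(f"{m_8:.2e} solar masses inside 8 kpc")
print(f"{m_16:.2e} solar masses inside 16 kpc")
print(f"Speed at 16 kpc if no extra mass: {keplerian_speed(220, 8, 16):.0f} km/s")
```

If almost all of a galaxy's mass sat inside the inner radius, orbital speeds farther out should fall off as 1/√r. A speed that instead stays flat implies that the enclosed mass keeps growing with radius, even where there is little visible matter, which is the kind of discrepancy Vera Rubin's galaxy observations revealed.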

A similar principle applies to whole clusters of galaxies. Rubin’s wide field of view will let it observe the motions of galaxies within clusters across the whole sky, giving these measurements a new level of statistical power. To study the related phenomenon of dark energy, a hypothetical form of energy invoked to explain the accelerating expansion of the universe, astronomers can compare the calculated mass of large objects with their observed mass.

“You get to see every galaxy cluster there is, and you can’t get more statistics than you get from the whole sky,” Reil said. “There’s real advantages of having all the data available on the subject versus having a small field of view.”
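
Reil’s point about statistics reflects a general rule: the uncertainty on an average of N independent measurements shrinks roughly as 1/√N. The Python sketch below simulates this with a hypothetical per-cluster measurement (the true value, scatter, trial count and seed are arbitrary assumptions, and `spread_of_estimates` is an illustrative name); it shows the scaling only and does not model how Rubin’s cosmology analyses actually work.

```python
import random
import statistics


def spread_of_estimates(n_clusters, trials=200, true_value=1.0, scatter=0.5, seed=42):
    """Simulate `trials` surveys, each averaging a noisy quantity measured in
    `n_clusters` clusters, and return the standard deviation of those averages."""
    rng = random.Random(seed)
    estimates = [
        statistics.fmean(rng.gauss(true_value, scatter) for _ in range(n_clusters))
        for _ in range(trials)
    ]
    return statistics.stdev(estimates)


for n in (10, 100, 1000):
    print(f"{n:>5} clusters: spread {spread_of_estimates(n):.3f} "
          f"(1/sqrt(N) prediction {0.5 / n ** 0.5:.3f})")
```

Each hundredfold increase in the number of clusters should cut the spread of the survey average by roughly a factor of ten, which is why covering every cluster in the sky is so valuable.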