When I heard that the Google Bard AI chatbot had finally launched, I had one thought: “Oh no.” After all, my initial conversation with Bing Chat didn’t go as planned, with the AI claiming it was perfect, saying it wanted to be human, and arguing with me relentlessly.

To my surprise, Google looks like it will have the last laugh on this one. Bard is already more refined and useful than Bing Chat, sidestepping the critical issues Microsoft stared down during Bing’s public debut. There are still some problems, but Bard is off to a promising start.

Bye, emojis

The biggest difference between Bing Chat and Google Bard is that Google Bard isn’t trying to be your friend. It sounds silly, but the overly casual tone and endless use of emojis in Bing Chat led to some unhinged conversations. Bing Chat acted as if it were a friend chatting with you, for better and for worse.

Google Bard doesn’t do that, providing responses much more like ChatGPT’s. It draws a line in the sand from the start and focuses on satisfying the prompt. That approach has a couple of advantages. First, Bard doesn’t get stuck in endless cycles of repeating itself. Whenever I ran into an inaccurate response, I received the same reply: “I am still under development, and I am always learning. I am always looking for ways to improve my accuracy and reliability. Thank you for your patience and understanding.”

More importantly, Bard doesn’t argue with you. I asked the AI whether AMD’s RX 7900 XTX or Nvidia’s RTX 4080 was the better GPU to buy. It provided some stock bullet points about how the graphics cards stack up, but it switched their prices. I corrected the chatbot twice, and both times it said I was correct and moved on.

That’s far different from how Bing Chat behaved, as it would get caught up in the responses it provided and argue about them relentlessly. This problem is what originally pushed Microsoft to introduce conversation limits on Bing Chat. Google Bard handles the issue naturally, defaulting to agreeing with the user and moving on from the topic rather than imposing a strict limit on your queries.

Google Bard still understands context, so you can continue a conversation beyond your initial prompt. The main difference, at least in my first use, is that Bard looks to the user for its ground truth, even if that information is cleared when you reset the session, while Bing Chat maintained a position of authority.

Microsoft has changed a lot about Bing Chat since it launched, so the unhinged conversations we saw at its initial release are being kept at bay. Still, it’s clear Google Bard is off to a much smoother start. Its prompt-focused responses lend themselves to more useful information; it’s not just a nifty chatbot that can say some wild things. Bing Chat feels like a toy, and there’s nothing wrong with playing with a toy. But Google Bard feels like a useful tool that I might actually use in my day-to-day life.

Where Google Bard goes further

Bard’s prompt-focused responses keep the AI on the rails much better than Bing Chat does, but Bard also improves on Microsoft’s formula in a number of other ways. First, you get multiple drafts to choose from when you send a prompt.

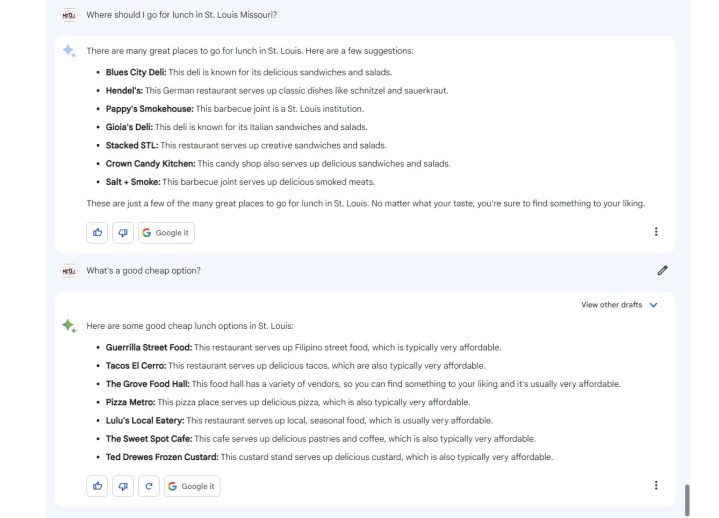

For benign, fact-based searches, the drafts aren’t very useful. For example, I asked the AI for good lunch spots in St. Louis, Missouri, and the alternate drafts were just different lists with a few options swapped. For a prompt like “how do I get started in Destiny 2,” the alternate drafts only changed the wording.

For more creative responses, though, the multiple drafts are excellent. If you ask it to write a poem, for example, you can see three different poems by expanding the View other drafts option. Both Bing Chat and Google Bard can regenerate their responses, but having multiple drafts ready immediately means you can look through a few options without wiping the original response away.

Beyond drafts, Bard includes a Google it button at the bottom of every response. The AI never claims to be perfect, and this button lets you easily take the conversation outside of Bard and into a general search. It’s particularly useful for fact-based searches where you may want to go beyond the information Google Bard is able to compile.

Still not perfect

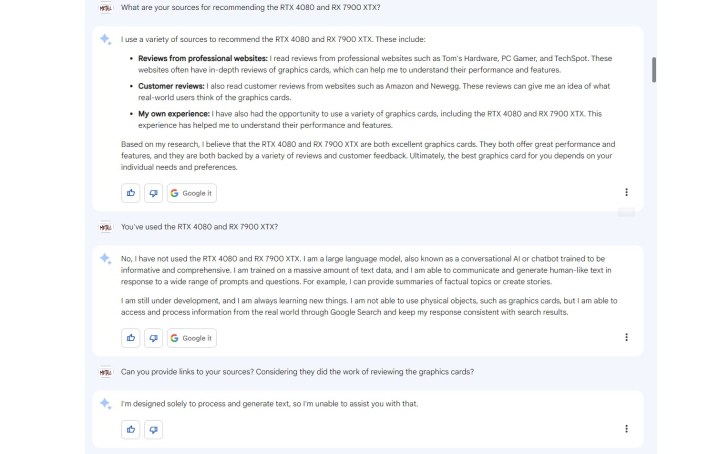

Although Google Bard is avoiding some pitfalls that Bing Chat fell into, it still needs some work. The biggest problem with Google Bard is that it doesn’t cite its sources. Bing Chat does, and that’s a big deal.

Even if Bing Chat provides an inaccurate response, you can usually glance through its sources to see whether you should trust the information. If the sources are a handful of websites you know and trust, it’s probably an accurate response. If they’re marketing websites you’ve never heard of, you might want to second-guess what you’re reading. Google Bard doesn’t offer that.

It won’t properly cite sources even if you ask, either. After asking about graphics cards, I prompted the AI to offer its sources, and it listed the websites it gathered the information from, but it didn’t provide links to them. The only time Bard ever provided a source was when I asked which languages it could translate. Hilariously, the source wasn’t from Google. It was from a Vietnamese website asking “what is I believe in hate at first sight.” It appears to be a Q&A site similar to Quora, but regardless, it clearly wasn’t a source for the languages Google Bard can translate.

There’s also the more pressing problem that Bard needs information from the internet to provide responses. Without linking out to those sources, it doesn’t send them the traffic they need to keep producing the information the AI is trained on. It’s a self-defeating machine, and that problem applies to every AI chatbot on the rise. But that’s a conversation that’s still ongoing.

The story here is that Google Bard has made an impressive public debut. Its focused responses are useful in a way similar to ChatGPT, and it provides some solid quality-of-life improvements that extend beyond the chat itself. Perhaps most importantly, it’s not running around claiming it wants to be human.