The push for open source isn’t limited to software; there’s also a big push for open-source hardware. Leading the charge is RISC-V (pronounced “risk five”), an instruction set architecture (ISA) stewarded by the RISC-V Foundation, which was founded in 2015 and is now called RISC-V International. The RISC ideas it builds on go back several decades, but RISC-V itself is still relatively unknown. Just because RISC-V isn’t a major player now doesn’t mean it won’t be important in the future.

What does RISC-V mean?

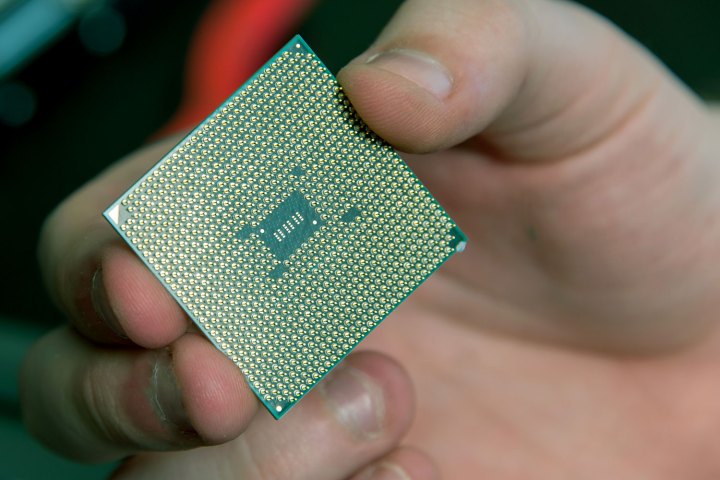

The term RISC was most likely coined by computer scientist David Patterson. It stands for reduced instruction set computer, meaning the instructions are designed to be simpler and easier to process. That contrasts with a complex instruction set computer, or CISC, design. Every x86 CPU is a CISC processor, including the Ryzen 9 5950X and the Core i9-12900KS.

So what does the -V mean? It’s the Roman numeral for five, because RISC-V is the fifth generation of Berkeley RISC designs. RISC-I and RISC-II were developed in the early 1980s, but chips named RISC-III and RISC-IV never technically existed. Those names were applied retroactively to SOAR, which came out in 1984, and SPUR, which came out in 1988. RISC-V considers itself the successor to SPUR.

The origins of RISC-V

RISC-V started out as a research project at UC Berkeley’s Parallel Computing Laboratory (the Par Lab), whose director was David Patterson. Professor Krste Asanović and his graduate students Yunsup Lee and Andrew Waterman began designing RISC-V in May 2010. They received funding through the Par Lab, which was itself largely funded by Intel and Microsoft.

In 2011, the first RISC-V chip taped out, meaning the design was finished and sent off to be fabricated as a prototype. In 2014, Asanović and Patterson published a paper that championed open-source hardware. It argued that the ideal open ISA should be RISC-based, and that it should specifically be RISC-V. The first RISC-V workshop was held in 2015, and later that year 36 companies founded the RISC-V Foundation. Founding members included Nvidia, Google, IBM, and Qualcomm. In 2020, the RISC-V Foundation became RISC-V International.

The case for open-source hardware

The fundamental concept behind RISC-V is that “technology does not persist in isolation.” It’s simply more efficient and effective for every company to adhere to one standard instead of building everything it needs from scratch. The RISC-V Foundation argues that the world needs RISC-V to avoid “fragmentation, forking, and the establishment of multiple standards.” Despite the name, RISC-V isn’t about RISC versus CISC; it’s about open source versus closed source.

RISC-V stands in opposition to closed-source ISAs in general, but especially x86 and ARM. x86 was originally Intel’s sole intellectual property. AMD later secured the right to make its own x86 processors after a lengthy legal battle that ended in 1995. x86 CPUs make up most of the desktop, laptop, and server markets, so RISC-V International is understandably concerned about a proprietary Intel and AMD duopoly.

But why does RISC-V International put ARM in the same league as Intel and AMD? Isn’t ARM much more open than x86? Well, yes, but it’s not open source. To use ARM the architecture, you have to pay ARM the company licensing fees. Most licensees use cores designed by ARM. Even companies with an architectural license, which lets them design their own cores, must implement the ARM instruction set as specified rather than change it. That hasn’t prevented companies like Apple and Qualcomm from making impressive ARM processors. Still, a company has to pay millions of dollars just for the privilege of using an architecture it can build on but not fundamentally alter.

What is the future of RISC-V?

One of RISC-V International’s primary focuses is the growing Internet of Things (IoT) market. Its introductory presentation predicts that by 2030 there will be 50 billion IoT devices in active use, about double the number in use today. The presentation also predicts that OEMs will design 40% of application-specific integrated circuits (ASICs) by 2025. More companies are going to want to design custom chips for their own products, and RISC-V International argues that the RISC-V architecture will make that possible.

RISC-V International isn’t just optimistic about the future; it’s growing more optimistic over time. In mid-2019, a study predicted that over 60 billion RISC-V cores would be on the market by 2025. In late 2020, that forecast was revised to nearly 80 billion, or about 14% of the entire CPU market.

RISC-V International talks a big game, but it has at least some reason to. It cites a 2020 study that found about 23% of ASIC and field-programmable gate array (FPGA) projects incorporated at least one RISC-V processor. Seagate, the hard drive manufacturer, is developing two RISC-V chips for hard drives. It claims they deliver up to three times the performance of other processors, though it never specified which processors it was comparing against. Alibaba is using RISC-V in its Xuantie 910 server CPU.

At the beginning of 2020, RISC-V International had about 600 members; today it has over 2,400. From 2022 to 2025, the organization expects the “proliferation of RISC-V CPUs” across the whole CPU market: high-performance computing, the cloud, IoT, enterprise, consumer, and more. It’s unclear what’s in store for RISC-V after 2025, since all the roadmaps seem to stop there. If the next three years go well for RISC-V, though, we can expect even more optimistic roadmaps for the years beyond 2025.

Can RISC-V compete with x86?

It’s too early to call. RISC-V International certainly doesn’t expect to overrun x86 titans Intel and AMD before 2025. The forecast it cites puts RISC-V at about 14% of the market, which is significant but still leaves the x86 duopoly mostly intact. Despite its great aspirations, RISC-V is still not very popular today. x86 dominates servers, laptops, and desktops, while ARM dominates mobile devices and is making inroads into x86-dominated segments.

We can’t say one way or another where RISC-V will end up. What we can say is that there’s a great deal of momentum for RISC-V and open-source hardware, and that energy has to go somewhere.