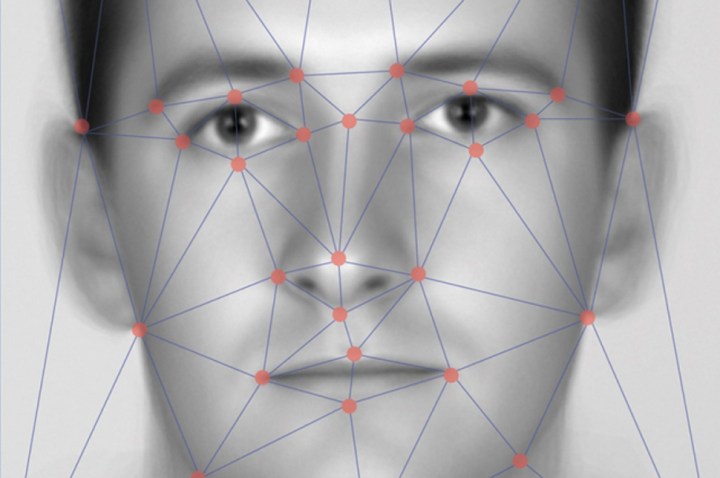

This second reaction is the thinking behind HyperFace Camouflage, a project created by Berlin-based artist and technologist Adam Harvey. The goal? To create guerrilla print patterns for clothing that are capable of confusing computers. The patterns appear to contain eyes, mouths, and other facial features, all designed to cause AI systems every manner of headache.

“I’m exploiting an algorithmic preference for [machines picking out] the most face-like shape and presenting it in a way that appears less like a face to a person and more like a face to a computer vision system,” Harvey told Digital Trends. “The intended outcome is that you could trick a facial recognition system into capturing an image region that does not contain your true face. This doesn’t make you invisible but can work in very specific situations.”

With the project still in development, it’s not yet clear what those “specific situations” may be. But given that facial recognition is used for everything from security systems to targeted advertising, there should be no shortage of places where it could be put to work.

The project was first presented at an event organized by the Chaos Computer Club in Hamburg, Germany, at the end of last year, and its textile print is set to launch at the Sundance Film Festival on January 16.

Harvey hopes the project will help raise awareness of the potential creepiness of a technology that will play a growing role in our lives in the years to come.

“People’s faces are vulnerable to surveillance in a different way than digital communication,” he said. “Faces are expressive and sometimes revealing. There has never been a moment in history when you’ve been able to analyze millions of faces a second without ever telling someone why or what you’re looking at specifically. Often, it’s the case that your face is being used for marketing purposes or even in research studies without your awareness or consent. … The curse of this internet celebrity status is that you will inevitably end up in someone’s dataset. Here we can see the roots of AI bias take hold as this ‘celebrity’ data molds the future of how faces are understood, or not, by machines.”