Smartphones may have smaller sensors and lenses than DSLRs, but what the cameras in our pockets lack in hardware, they can (sometimes) make up for with software and computing power — as well as tweaks to that tiny hardware. Portrait mode is now a common feature on most smartphones, but what exactly does it do? Is it just another catchphrase to get you to pay more money for a phone, or does portrait mode really capture better photos?

While the technology behind the camera feature differs between smartphones, portrait mode is a form of computational photography that helps smartphone snapshots look a bit more like they came from a high-end camera. Here’s how portrait mode works.

What is portrait mode?

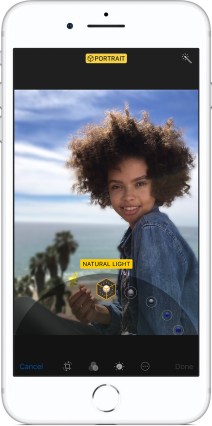

Portrait mode is a feature on many smartphones that helps you take better pictures of people by capturing a sharp face against a nicely blurred background. It’s designed specifically to improve close-up photos of one person (hence the name), though you can also use it for objects. Portrait mode started as one of the scene modes typically found on digital cameras and has since been adapted to smartphone photography. While the portrait mode on a digital camera and the portrait mode on a smartphone share a name, they differ drastically in how the image is taken.

When first offered as a photo mode on digital cameras, portrait mode helped novice photographers take better portraits by adjusting the camera settings. The aperture, or the opening in the lens, widens to blur the background. A blurred background draws the eye to the subject and eliminates distractions, so wide apertures are popular for professionally shot portraits. Over time, cameras added further optimizations to the mode, such as processing that removes red eye and autofocus adjustments that keep faces sharp.

A smartphone camera, however, cannot adjust those settings to take a better portrait. For starters, the aperture on most smartphone cameras is fixed, so you can’t actually change it (the Samsung Galaxy S9 and S9 Plus are notable exceptions). Even on the few models that allow for an adjustable aperture, however, the lens and sensor inside a smartphone camera are too small to create the blur that DSLRs or mirrorless cameras are capable of capturing.

Smartphone manufacturers can’t fit a large DSLR sensor inside a smartphone and still have it fit in your pocket, but smartphones have more computing power than a DSLR. That difference is what powers a smartphone’s portrait mode. On a smartphone, portrait mode is a form of computational photography that artificially applies blur to the background to mimic the background blur of a DSLR. Smartphone portrait mode relies on a mix of software and hardware.

Blurring the background of a photo is tougher than it sounds — for starters, the smartphone needs to be able to tell what’s the background and what’s not in order to keep the face sharp. Different manufacturers have found different ways to determine what to blur and what to leave sharp, which means that, brand by brand, smartphone portrait modes can look considerably different.

If you really want to learn how portrait mode works on modern smartphones, it’s important to understand the tricks phone manufacturers use to enable this feature.

How phones make portrait mode work

Apple is widely credited with fueling the portrait mode trend when it introduced the feature on the iPhone 7 Plus in 2016. It proved popular, and a number of other manufacturers began releasing phones with their own portrait optimization. Let’s break down the different methods used to blur that background:

Two-lens depth mapping

The original smartphone portrait mode requires a dual-lens camera. Depth mapping uses both the telephoto lens and the wide-angle lens on a smartphone to examine the same visual field and compare notes. These two different viewpoints work together to create a “depth map,” an estimate of how far away each object in the shot is. With the depth map, the smartphone can then determine what’s the background and what’s not.
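To make the depth-map idea concrete, here is a minimal Python sketch of the textbook stereo relation behind it. An object close to the camera shifts more between the two views than a distant one, so its distance can be estimated as focal length times lens spacing divided by that shift (the “disparity”). The function names and numbers are hypothetical, and the sketch assumes both images have already been aligned and scaled to the same focal length, a step real phones must perform first because their telephoto and wide-angle lenses differ.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Estimate distance in meters to a point seen by two side-by-side cameras.

    Uses the pinhole stereo relation Z = f * B / d, where f is the focal length
    in pixels, B is the spacing between the lenses in meters, and d is how many
    pixels the point shifts between the two (already aligned) views.
    """
    if disparity_px <= 0:
        # No measurable shift: treat the point as infinitely far away.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


def depth_map(disparities, focal_length_px, baseline_m):
    """Turn a 2D grid of per-pixel disparities into a 2D grid of distances."""
    return [
        [depth_from_disparity(d, focal_length_px, baseline_m) for d in row]
        for row in disparities
    ]
```

With a hypothetical 1,000-pixel focal length and lenses 1 cm apart, a point that shifts 20 pixels works out to 0.5 meters away, while a point that shifts only 2 pixels sits about 5 meters away, which is why the background ends up with the smallest disparities.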

Using the depth map together with face detection, the phone runs the image through a blurring algorithm that blurs the background while keeping the face sharp. This is the technique used by the latest Samsung Galaxy phones and the latest iPhones, including the iPhone X.
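Once a depth map exists, the simplest possible version of the blurring step is to blur a copy of the whole photo and then keep the original pixels only where the depth map says the subject is. The Python sketch below does that on a tiny grayscale image stored as nested lists. The cutoff distance, blur radius, and function names are hypothetical, and real phones are considerably more sophisticated, for example by varying blur strength with distance and by using face detection to protect the subject.

```python
def box_blur(image, radius):
    """Blur a 2D grayscale image (list of rows) by averaging each pixel's neighborhood."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            # Average every in-bounds pixel within `radius` of (x, y).
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        total += image[ny][nx]
                        count += 1
            out[y][x] = total / count
    return out


def fake_portrait(image, depth, subject_max_depth_m, radius=2):
    """Keep pixels nearer than the cutoff sharp; replace farther ones with blurred values."""
    blurred = box_blur(image, radius)
    return [
        [
            image[y][x] if depth[y][x] <= subject_max_depth_m else blurred[y][x]
            for x in range(len(image[0]))
        ]
        for y in range(len(image))
    ]
```

In a 3×3 test image with a single bright pixel at 0.5 m and everything else at 5 m, a 1-meter cutoff leaves the bright pixel untouched while its glow is smeared into the surrounding “background” pixels.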

Pixel splitting

Rather than requiring two lenses, pixel splitting requires a specific type of camera sensor and just one lens. Instead of creating a depth map from two different lenses, this technique creates a depth map from the two sides of the same pixel. In smartphones with dual-pixel autofocus, like the Google Pixel 2, each pixel actually has two photodiodes. Just as with a dual-lens camera, the software compares the slightly different views from the two sides of the pixel to create a depth map. The camera can then use that depth map to apply blur, with no need for an image from a separate lens. Because only one lens is needed, phones with this capability can offer portrait mode on the front-facing camera as well, which may be better suited for your selfies.
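Dual-pixel sensors produce two very slightly offset views, one from each half of every pixel, so the depth step starts by measuring how far one view is shifted relative to the other. The minimal Python sketch below finds that shift along a single row by trying each candidate offset and keeping the one with the smallest average difference. The function name is hypothetical, and because the two halves of a pixel sit so close together, the real shifts are tiny, so production pipelines use far more refined matching than this brute-force search.

```python
def estimate_shift(left, right, max_shift):
    """Return the integer shift s that best aligns two rows, so that right[i + s] ~ left[i].

    Tries every shift from -max_shift to +max_shift and scores each one by the
    mean absolute difference over the positions where the rows overlap.
    """
    best_shift, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        cost, n = 0.0, 0
        for i in range(len(left)):
            j = i + s
            if 0 <= j < len(right):
                cost += abs(left[i] - right[j])
                n += 1
        # Keep the shift with the lowest average mismatch.
        if n and cost / n < best_cost:
            best_cost, best_shift = cost / n, s
    return best_shift
```

For a row in which a bright edge appears one position later in the right-hand view, the function reports a shift of 1, and two identical views give 0. Repeating this for small patches across the frame yields the per-pixel disparities that a depth map is built from.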

Software-only portrait mode

Ideally, portrait mode uses a mix of hardware and software for the best results. But what if you can’t control the hardware? Apps designed to work on multiple devices use artificial intelligence and facial recognition to guess where the person is and where the background is. Because there’s no depth map, the result isn’t as accurate as methods that combine hardware and software, but this type of portrait mode is available on a wider range of smartphones. Instagram has a version of portrait mode inside its built-in camera that it calls Focus.
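Without a depth map, a software-only approach has to work from a guess about which pixels belong to the person. One generic way to use such a guess, sketched below in Python, is to blend a sharp and a blurred copy of the photo pixel by pixel according to a 0-to-1 score. The score is assumed to come from some person-detection or segmentation model that is not shown, the function name is hypothetical, and this is a common compositing technique rather than a description of how Focus or any other specific app works.

```python
def blend_with_mask(sharp, blurred, person_prob):
    """Mix a sharp and a blurred image per pixel using a 0..1 "is this the person?" score.

    person_prob is assumed to come from a segmentation model (not shown):
    1.0 keeps the sharp pixel, 0.0 uses the blurred one, and values in
    between give a softer transition at the edge of the subject.
    """
    return [
        [p * s + (1.0 - p) * b for s, b, p in zip(srow, brow, prow)]
        for srow, brow, prow in zip(sharp, blurred, person_prob)
    ]
```

For example, a sharp value of 100 and a blurred value of 20 stay at 100 where the score is 1.0 and become 60 where the score is 0.5.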

What’s the difference?

Because each manufacturer uses its own software and algorithms, results can vary even between phones that use the same method. How different are they? The Unlockr took a look for us, comparing the Galaxy Note 8, iPhone X, Huawei Mate 10 Pro, and Pixel 2 XL. Look at the shading and background blur in each shot, and the differences in how portrait mode performs on each model become clear.

Along with producing different results, different devices offer distinct features. Because the Pixel 2 doesn’t need two lenses, its portrait mode works with both the rear-facing and front-facing cameras. The iPhone X can also use portrait mode on the front-facing camera, but it does so using a 3D depth map from Face ID.

The bottom line on portrait mode

The best portraits still come from interchangeable-lens cameras, thanks to their aperture control and larger sensors, and no smartphone portrait looks quite as good. However, computational photography lets smartphones come closer than ever before by artificially blurring the background. The Pixel 2 XL appears to take the best portrait photos, thanks to intelligent software and one of the best smartphone cameras around. The iPhone X also performs well, although in our experience it tends to darken images a little.

While portrait mode differs between models, the biggest difference is between a phone with portrait mode and one without. Without the hardware to create a depth map, portrait modes can’t quite reach the same level of realistic background blur. If you snap a lot of photos of people, portrait mode makes for a dramatic improvement in photo quality, even on a smartphone. That difference is enough to justify choosing one phone over another.
