The Times profiled an 18-year-old Ukrainian woman named “Luba Dovzhenko” in March to illustrate life under siege. She, the article claimed, studied journalism, spoke “bad English,” and began carrying a weapon after the Russian invasion.

The issue, however, was that Dovzhenko doesn’t exist in real life, and the story was taken down shortly after it was published.

Luba Dovzhenko was a fake online persona engineered to capitalize on the growing interest in Ukraine-Russia war stories on Twitter and gain a large following. Not only had the account never tweeted before March, but it had also previously used a different username, and the updates it had been tweeting, which are possibly what drew The Times’ attention, had been ripped off from other genuine profiles. The most damning evidence of her fraud, however, was right there in her face.

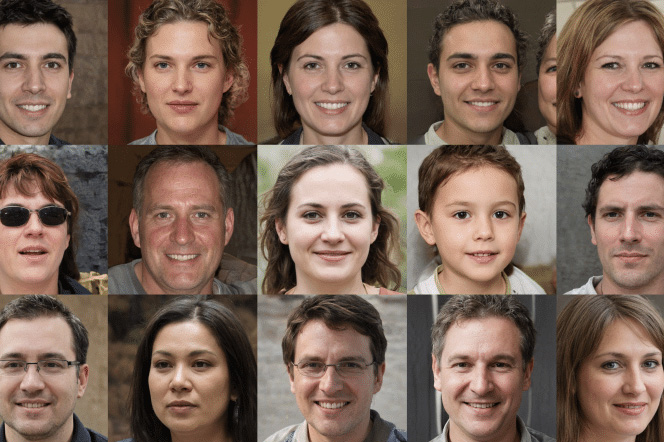

In Dovzhenko’s profile picture, some of her hair strands were detached from the rest of her head, a few eyelashes were missing, and most importantly, her eyes were strikingly centered. They were all telltale signs of an artificial face coughed up by an AI algorithm.

The facial feature positioning isn't the only anomaly in @lubadovzhenko1's profile pic; note the detached hair in the lower right portion of the image and the partially missing eyelashes (among other things). pic.twitter.com/UPuvAQh4LZ

— Conspirador Norteño (@conspirator0) March 31, 2022

Dovzhenko’s face was fabricated by the tech behind deepfakes, an increasingly mainstream technique that allows anyone to superimpose a face over another person’s in a video and is employed for everything from revenge porn to manipulating world leaders’ speeches. Fed millions of pictures of real people, such algorithms can be repurposed to create lifelike faces like Dovzhenko’s out of thin air. It’s a growing problem that’s making the fight against misinformation even more difficult.

An army of AI-generated fake faces

Over the last few years, as social networks crack down on faceless, anonymous trolls, AI has armed malicious actors and bots with an invaluable weapon: the ability to appear alarmingly authentic. Unlike before, when trolls simply ripped real faces off the internet and anyone could unmask them by reverse-imaging their profile picture, it’s practically impossible for someone to do the same for AI-generated photos because they’re fresh and unique. And even upon closer inspection, most people can’t tell the difference.

Dr. Sophie Nightingale, a psychology professor at the U.K.’s Lancaster University, found that people have just a 50% chance of spotting an AI-synthesized face, and many even considered them more trustworthy than real ones. The means for anyone to access “synthetic content without specialized knowledge of Photoshop or CGI,” she told Digital Trends, “creates a significantly larger threat for nefarious uses than previous technologies.”

What makes these faces so elusive and realistic, says Yassine Mekdad, a cybersecurity researcher at the University of Florida whose model for spotting AI-generated pictures has a 95.2% accuracy rate, is the way they are produced. The underlying program, known as a generative adversarial network (GAN), pits two neural networks against each other in order to improve an image. One (G, the generator) is tasked with generating fake images and misleading the other, while the second (D, the discriminator) learns to tell the first’s results apart from real faces. This “zero-sum game” between the two allows the generator to produce “indistinguishable images.”
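To make the “zero-sum game” concrete, here is a minimal sketch of the score the two networks fight over, using the standard GAN objective from the research literature rather than anything Mekdad described in detail. The function name and the sample scores are hypothetical, and no actual neural network is trained here. The discriminator tries to push the value up, and the generator tries to pull it down.

```python
import math

def gan_value(d_real, d_fake):
    """Value of the GAN game for one batch of discriminator scores.

    d_real: discriminator scores in (0, 1) for real faces
    d_fake: discriminator scores in (0, 1) for generated faces
    A score near 1.0 means "looks real", near 0.0 means "looks fake".
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# Hypothetical scores: a discriminator that still catches the fakes...
sharp_discriminator = gan_value(d_real=[0.9, 0.95], d_fake=[0.1, 0.05])
# ...versus one reduced to a coin flip because the fakes are too convincing.
fooled_discriminator = gan_value(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])

print(sharp_discriminator > fooled_discriminator)
```

When the discriminator can do no better than guess, every score is 0.5 and the value bottoms out at -2 ln 2, which is the point at which the generator's images are effectively indistinguishable to it.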

And AI-generated faces have indeed taken over the internet at a breakneck pace. Apart from accounts like Dovzhenko’s that use synthesized personas to rack up a following, this technology has lately powered much more alarming campaigns.

When Google fired an AI ethics researcher, Timnit Gebru, in 2020 for publishing a paper that highlighted bias in the company’s algorithms, a network of bots with AI-generated faces, who claimed they used to work in Google’s AI research division, cropped up across social networks and ambushed anyone who spoke in Gebru’s favor. Similar activity promoting government narratives has been detected from countries like China.

On a cursory Twitter review, it didn’t take me long to find several anti-vaxxers, pro-Russians, and more — all hiding behind a computer-generated face to push their agendas and attack anyone standing in their way. Though Twitter and Facebook regularly take down such botnets, they don’t have a framework to tackle individual trolls with a synthetic face even though the former’s misleading and deceptive identities policy “prohibits impersonation of individuals, groups, or organizations to mislead, confuse, or deceive others, nor use a fake identity in a manner that disrupts the experience of others.” This is why when I reported the profiles I encountered, I was informed they didn’t violate any policies.

Sensity, an AI-based fraud solutions company, estimates that about 0.2% to 0.7% of people on popular social networks use computer-generated photos. That doesn’t seem like much on its own, but for Facebook (2.9 billion users), Instagram (1.4 billion users), and Twitter (300 million users), it means millions of bots and actors that potentially could be part of disinformation campaigns.
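As a back-of-the-envelope check on that “millions” claim, the short sketch below applies Sensity’s 0.2% to 0.7% range to the user counts quoted above. The variable and function names are illustrative, and the result is only as good as those input figures.

```python
# User counts and the 0.2%-0.7% share are the figures quoted in the article.
USERS = {
    "Facebook": 2_900_000_000,
    "Instagram": 1_400_000_000,
    "Twitter": 300_000_000,
}
LOW_PER_THOUSAND, HIGH_PER_THOUSAND = 2, 7  # 0.2% and 0.7%

def synthetic_profile_range(users):
    """Return the (low, high) number of accounts implied by the estimate."""
    # Integer arithmetic keeps the totals exact.
    return (users * LOW_PER_THOUSAND // 1000, users * HIGH_PER_THOUSAND // 1000)

estimates = {name: synthetic_profile_range(count) for name, count in USERS.items()}

for name, (low, high) in estimates.items():
    print(f"{name}: {low / 1e6:.1f}M to {high / 1e6:.1f}M accounts")
```

That works out to roughly 5.8 million to 20.3 million accounts on Facebook, 2.8 million to 9.8 million on Instagram, and 0.6 million to 2.1 million on Twitter.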

Match rates from a Chrome extension by V7 Labs that detects AI-generated faces broadly corroborate Sensity’s figures. The company’s CEO, Alberto Rizzoli, claims that on average, 1% of the photos people upload are flagged as fake.

The fake face marketplace

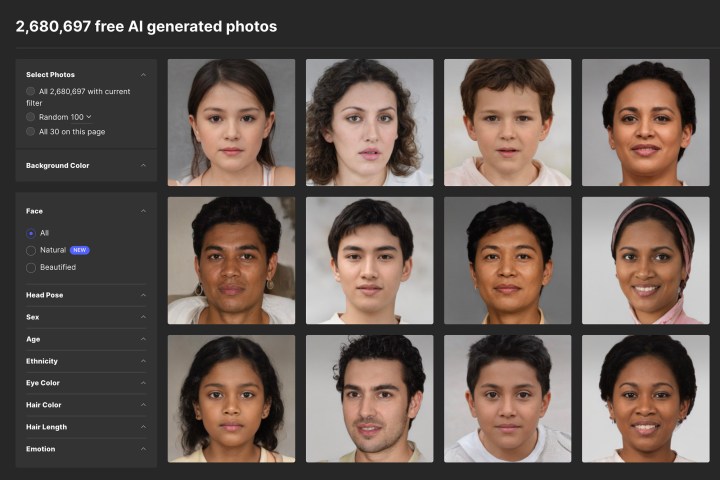

Part of why AI-generated photos have proliferated so rapidly is how easy it is to get them. On platforms like Generated Photos, anyone can acquire hundreds of thousands of high-res fake faces for a couple of bucks, and people who need only a few for one-off purposes like personal smear campaigns can download them from websites such as thispersondoesnotexist.com, which auto-generates a new synthetic face every time you reload it.

These websites have made life especially challenging for people like Benjamin Strick, the investigations director at the U.K.’s Centre for Information Resilience, whose team spends hours every day tracking and analyzing online deceptive content.

“If you roll [auto-generative technologies] into a package of fake-faced profiles, working in a fake startup (through thisstartupdoesnotexist.com),” Strick told Digital Trends, “there’s a recipe for social engineering and a base for very deceptive practices which can be setup within a matter of minutes.”

Ivan Braun, the founder of Generated Photos, argues that it’s not all bad, though. He contends that GAN photos have plenty of positive use cases — like anonymizing faces in Google Maps’ street view and simulating virtual worlds in gaming — and that’s what the platform promotes. If someone is in the business of misleading people, Braun says he hopes his platform’s antifraud defenses will be able to detect the harmful activities, and that eventually social networks will be able to filter out generated photos from authentic ones.

But regulating AI-based generative tech is tricky, too, since it also powers countless valuable services, including that latest filter on Snapchat and Zoom’s smart lighting features. Sensity CEO Giorgio Patrini agrees that banning services like Generated Photos is an impractical way to stem the rise of AI-generated faces. Instead, there’s an urgent need for more proactive approaches from platforms.

Until that happens, the adoption of synthetic media will continue to erode trust in public institutions like governments and journalism, says Tyler Williams, the director of investigations at Graphika, a social network analysis firm that has uncovered some of the most extensive campaigns involving fake personas. And a crucial element in fighting against the misuse of such technologies, Williams adds, is “a media literacy curriculum starting from a young age and source verification training.”

How to spot an AI-generated face?

Lucky for you, there are a few surefire ways to tell if a face is artificially created. The thing to remember here is that these faces are conjured up simply by blending tons of photos. So though the actual face will look real, you’ll find plenty of clues on the edges: The ear shapes or the earrings might not match, hair strands might be flying all over the place, and the eyeglass trim may be odd. The list goes on. The most common giveaway is that when you cycle through a few fake faces, all of their eyes will be in the exact same position: in the center of the screen. And you can test with the “folded train ticket” hack, as demonstrated here by Strick.
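The eye-position giveaway can also be checked programmatically. The sketch below is a rough illustration, not a tool used by any of the researchers quoted here. It assumes you already have eye coordinates for each photo from some face landmark detector (not shown), and the function name, sample coordinates, and 0.01 tolerance are all hypothetical.

```python
from statistics import pstdev

def eyes_share_position(eye_landmarks, tolerance=0.01):
    """Return True when eye positions barely move across a set of photos.

    eye_landmarks: one entry per photo, ((left_x, left_y), (right_x, right_y)),
    with coordinates normalized to the image width and height (0.0 to 1.0).
    """
    if len(eye_landmarks) < 2:
        raise ValueError("need at least two photos to compare")
    # Flatten each photo into (left_x, left_y, right_x, right_y), then
    # measure how much each coordinate varies from photo to photo.
    columns = zip(*[(lx, ly, rx, ry) for (lx, ly), (rx, ry) in eye_landmarks])
    return all(pstdev(column) <= tolerance for column in columns)

# Hypothetical landmarks: eyes pinned to the same spot in every photo...
suspicious = [
    ((0.380, 0.480), (0.620, 0.480)),
    ((0.381, 0.479), (0.619, 0.481)),
    ((0.379, 0.481), (0.621, 0.480)),
]
# ...versus ordinary photos, where framing varies from shot to shot.
organic = [
    ((0.30, 0.40), (0.52, 0.41)),
    ((0.45, 0.55), (0.70, 0.52)),
    ((0.25, 0.35), (0.48, 0.38)),
]

print(eyes_share_position(suspicious), eyes_share_position(organic))
```

A match across a batch of profiles is only a hint that they came from the same generator, since tightly cropped passport-style photos can line up too.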

Nightingale believes the most significant threat AI-generated photos pose is fueling the “liar’s dividend” — the mere existence of them allows any media to be dismissed as a fake. “If we cannot reason about basic facts of the world around us,” she argues, “then this places our societies and democracies at substantial risk.”
