AMD has been around for a long time, and with the recent announcement of its Ryzen 7000 processors, we decided it was time to look back. The company’s storied history is filled with many highs, but just as many lows.

Although AMD is well known for its graphics, it only started selling GPUs after acquiring ATI in 2006. Its CPU business is much, much older, going all the way back to the ’70s. And just as AMD’s graphics are inextricably intertwined with those of Nvidia, AMD’s CPUs are hard to separate from those of its other rival, Intel.

Here are six AMD CPUs that not only proved the company a true competitor to much larger rivals but also pushed technology, and the world, forward.

Athlon

AMD’s first victory

The Athlon 1000 came out in 2000, and AMD was founded in 1969, so before I talk about how AMD beat Intel in the race to 1GHz, I should cover how AMD got here in the first place. Although the company was making its own processors in the ’70s, AMD very quickly assumed the role of a second source for Intel chips, which gave AMD the right to use the x86 architecture. Second sourcing was important back then because computer makers such as IBM wanted to be sure they would have enough supply and that it would be delivered promptly. For most of the ’70s and ’80s, AMD got by making Intel CPUs.

Eventually, Intel wanted to cut AMD out of the picture and tried to exclude it from producing the 80386 (which AMD would later clone to make its Am386). Intel’s exclusion of AMD sparked the first of many lawsuits between the two companies, and in 1995 they settled, securing AMD’s right to use the x86 architecture. Soon after, AMD launched its first CPU developed without Intel technology: the K5. It competed not only against Intel’s established CPU business but also against Cyrix, another company using the x86 architecture. The K5, and the K6 that followed in 1997, gave buyers on a budget an alternative to Intel but couldn’t compete on performance.

That all changed with the K7, better known as Athlon, a name derived from the Ancient Greek word for “contest” or “arena.” Launching in 1999, the original line of Athlon CPUs didn’t just close the gap with Intel’s Pentium series; AMD beat Intel outright. The new K7 had much higher clock speeds than the old K6 as well as significantly more cache. Anandtech speculated that Intel would need a 700MHz Pentium III to beat AMD’s 650MHz Athlon, and also observed that AMD’s lower prices would keep Athlon competitive, albeit at great expense to AMD.

Over the next few months, AMD and Intel kept one-upping each other, releasing new CPUs with ever-higher clock speeds. The race for the highest clock speed wasn’t just about performance; a higher frequency was good marketing, too. Despite Intel being the far larger company, AMD beat it to 1GHz by launching the Athlon 1000 in March 2000. Intel announced its own 1GHz Pentium III just a couple of days later, and it outperformed the Athlon 1000, but AMD’s CPU was available at retail much sooner.

The entire Athlon lineup was a massive upset in the CPU industry, and AMD’s underdog status cemented the Athlon’s legendary reputation almost as soon as it came out. Intel still held the advantage thanks to its massive size and healthy finances, but not long before, AMD had been just a company making extra CPUs for Intel. By 2000, AMD had ambitions to take 30% of the entire CPU market.

Athlon 64

AMD defines 64-bit computing

In the few years after the race to 1GHz, both AMD and Intel ran into trouble getting their next-generation CPUs out. Intel launched its new Pentium 4 CPUs first, in late 2000, but they were hobbled by high prices, reliance on expensive, cutting-edge RDRAM, and the infamous NetBurst architecture, which was designed for high clock speeds at the expense of power efficiency. Meanwhile, AMD was refining its existing line of Athlons, which didn’t deliver next-generation performance.

AMD had a good reason for the delay, however: its next generation of CPUs was set to bring 64-bit computing to x86. This was arguably a far more important goal than 1GHz, as 64-bit computing was a massive improvement over 32-bit computing for a variety of tasks, not least because it lifted the 4GB address-space limit of 32-bit x86. Intel actually beat AMD to the punch with its Itanium server CPUs, but Itanium was deeply flawed because it used an entirely new architecture that ran existing 32-bit x86 software poorly. That gave AMD a big opening to introduce its 64-bit extension of the x86 architecture: AMD64.

AMD64 finally debuted in 2003, first in the brand-new Opteron series of server CPUs and later in Athlon 64 chips. While Anandtech wasn’t super impressed by the value of AMD’s new desktop CPUs (especially the flagship Athlon 64 FX), the publication appreciated their performance in 64-bit applications. AMD’s superior approach to 64-bit was a key reason why Athlon 64, and particularly Opteron, sold well. Ultimately, AMD64 became the basis of the x86-64 architecture that even Intel adopted, while Itanium failed to accomplish any of its goals before its discontinuation in 2020 (yes, it survived that long).

As a result, Intel felt that its CPU business was in mortal danger from AMD. To protect it, Intel relied on something AMD couldn’t match: money. Intel started paying companies like Dell and HP large sums in the form of rebates and special deals to stick with Intel and avoid AMD CPUs. These arrangements were extremely secretive, and as OEMs grew more reliant on Intel’s rebates, they became reluctant to ever use AMD chips, since doing so would mean giving up that money.

AMD filed a lawsuit in 2005, but the legal battle wasn’t resolved until 2009, after Intel had been fined by regulators in several countries and jurisdictions, including a $1.5 billion fine in the EU. The two companies settled out of court, and although Intel denied any wrongdoing, it promised not to engage in anticompetitive practices in the future. Intel also agreed to pay AMD $1.25 billion in compensation.

Even so, the damage had been done. While the legal battle raged on, Intel continued to cut deals with OEMs, and AMD’s market share quickly began to decline even though its chips were very competitive with Intel’s. Opteron in particular suffered, reaching over 25% market share in 2006 but falling to just under 15% a year later. The acquisition of ATI in 2006 also contributed to AMD’s worsening finances, though Intel arguably did greater damage by denying AMD the chance not just to sell chips but to build a strong ecosystem within the CPU market. AMD wasn’t out of the fight, but things were looking bad.

Bobcat and Jaguar

AMD’s final refuge

The 2000s were the heyday of the desktop PC, with its high power consumption and equally high performance. The next step in computing wasn’t in the office or at home, though, but on the go.

AMD was working on a low-power CPU of its own to rival Intel’s Atom, but it had different ideas about where to focus its efforts. The company didn’t want a fight with ARM, which had a stranglehold on phones and other devices, so AMD decided to focus on the traditional x86 market, mainly

Although it was late to the party in 2011, Bobcat immediately established itself not just as an Atom competitor but as an Atom killer. It had pretty much all the media features most people could want, plus much higher CPU and GPU performance than Atom (an instance of the ATI acquisition starting to pay off). Power consumption was extremely good on Bobcat as well, and Anandtech observed that AMD finally had “a value offering that it doesn’t have to discount heavily to sell.” Bobcat was a big success for AMD, shipping in 50 million devices by 2013.

AMD followed up with Jaguar, which was perhaps even more important. It significantly reduced power consumption and improved performance over Bobcat, thanks to TSMC’s newer 28nm node as well as several architectural improvements. In Cinebench 11.5, the quad-core, Jaguar-based A4-5000 was 21% faster than the dual-core, Bobcat-based E-350 in the single-threaded test, and a massive 145% faster in the multi-threaded test. Jaguar’s combination of performance and power efficiency made it the obvious choice for Sony’s and Microsoft’s next-gen consoles, the PS4 and the Xbox One, shutting Intel and Nvidia out of that console generation.

Anandtech summed it up nicely: “In its cost and power band, Jaguar is presently without competition. Intel’s current 32nm Saltwell Atom core is outdated, and nothing from ARM is quick enough. It’s no wonder that both Microsoft and Sony elected to use Jaguar as the base for their next-generation console SoCs, there simply isn’t a better option today… AMD will enjoy a position it hasn’t had in years: a CPU performance advantage.”

These are not the CPUs you’d expect to see on this list after reading about the Athlon Classic and Athlon 64, which took the fight to the very top. The thing is, these CPUs were AMD’s lifeline for years and probably saved the company from bankruptcy, which was a real concern in the 2010s.

After Athlon 64, AMD lost the technological lead and struggled to regain it. In 2006, Intel introduced its Core architecture, which was significantly better than NetBurst and put Intel back ahead in both performance and efficiency. AMD countered with its Phenom CPUs, which competed on value thanks to low prices, but that hurt AMD financially. AMD’s GPUs from this period were among the best the company ever launched, but they were priced so low that they barely made any money. So in 2011, AMD gambled on a brand-new architecture called Bulldozer to dig itself out of the hole.

Bulldozer was a disaster. It was barely any better than Phenom and was worse than the competing Sandy Bridge CPUs from Intel — not to mention very hot and power inefficient. It just wasn’t good enough. Anandtech saw the writing on the wall: “We all need AMD to succeed. We’ve seen what happens without a strong AMD as a competitor. We get processors that are artificially limited and severe restrictions on overclocking, particularly at the value end of the segment. We’re denied choice simply because there’s no other alternative. It’s no longer a question of whether AMD will return to the days of the Athlon 64, it simply must. Otherwise you can kiss choice goodbye.”

Bulldozer failed to keep Intel in check, which meant Intel got to dictate the direction of the entire x86 CPU market for five long years.

Ryzen 7 1700

AMD is back

From 2011 to 2017, every flagship mainstream desktop CPU from Intel was a Core i7 with four cores and Hyperthreading, and each one launched at around $330. CPUs with higher core counts did exist, but they were well out of budget for most users. Further down the stack, the mid-range Core i5 and low-end Core i3 likewise kept the same core counts and prices every generation. The pace of improvement in both performance and value had slowed to a crawl.

In the background, however, AMD was working on a brand-new CPU that would change everything. First disclosed in 2015, Zen was a new architecture that would replace not just Bulldozer, but also the “Cat” cores (such as Bobcat and Jaguar) that had kept AMD afloat during much of the 2010s. Zen promised to be a massive improvement thanks to three things: 40% more instructions per clock (IPC) than Excavator, the last of the Bulldozer-derived cores; simultaneous multithreading (SMT), essentially the same as Intel’s Hyperthreading; and eight cores.

The level of fanfare for Zen was unprecedented. The reveal event for the first Zen desktop CPUs was fittingly named New Horizon and featured Geoff Keighley of The Game Awards fame in the opening act. When AMD CEO Lisa Su finally came on stage and announced the brand-new Ryzen desktop CPUs, she received what was probably more applause and cheering than any CPU launch had ever seen. People were eager for the perpetual underdog to finally win and make the market competitive again.

When the reviews finally came out, Ryzen lived up to the hype. Of the three high-end CPUs AMD launched in early 2017, the Ryzen 7 1700 was arguably the most enticing. It cost about the same as Intel’s Core i7-7700K but had eight cores, double the count of Intel’s flagship. In our review, we found that the 1700 excelled at multi-threaded workloads and trailed the 7700K in single-threaded tasks and games, but not so far as to be another Bulldozer. The 1700 was also a great overclocking chip, making the more expensive 1700X and 1800X pointless.

But Zen didn’t just mean the return of mainstream AMD CPUs. Across the entire market, AMD was introducing new Zen-powered processors, from the low-end Raven Ridge APUs with Radeon Vega graphics, to the high-end desktop Threadripper for professionals, to Epyc server CPUs, the first truly competitive AMD server CPUs in years.

Perhaps AMD’s greatest innovation was its use of multi-chip modules (MCM), which put multiple CPU dies on the same package to reach high core counts for high-end desktops (HEDT) and servers. The primary benefit was cost efficiency: AMD didn’t need to design a separate chip for every segment of the market with more than four cores, and manufacturing several small dies is cheaper than manufacturing one large CPU, because a single manufacturing defect scraps far less silicon.

With Zen, AMD was back, and in a big way — but the company wasn’t content with second place anymore. It wanted the gold medal.

Ryzen 9 3950X

Going for the jugular

Meanwhile, the pressure from AMD could not have come at a worse time for Intel. The company had shot itself in the foot by setting extremely ambitious targets for its 10nm node, and although 10nm was originally scheduled to launch in 2015, it was nowhere in sight. AMD had planned for an uphill battle against 10nm CPUs in 2019, but they never arrived in volume, and Intel was stuck on 14nm for the foreseeable future. This opened the possibility that AMD might do the unthinkable and gain the process advantage, something the company had never had before.

Since AMD expected a tough fight, it wanted to move to a new node as soon as possible, and it chose TSMC’s 7nm node. Producing on 7nm would normally be quite expensive, but AMD already had a way around that problem: MCM, the foundation for a radical new way of building CPUs called chiplets. The idea was to produce only the most important parts of the CPU (like the cores) on an advanced node and have everything else (like the memory controllers and I/O) produced on an older, cheaper node. To add more cores, just add more chiplets. Things were about to get crazy.

In 2019, AMD launched the 7nm Zen 2 architecture, with the brand-new Ryzen 3000 series leading the charge. Whereas Ryzen 1000 and 2000 (a mere refinement of the 1000 series) nipped at Intel’s heels, Ryzen 3000 was indisputably the new leader in almost every metric. The flagship Ryzen 9 3950X had 16 cores, which was insane at a time when the previous flagship, the Ryzen 7 2700X, had just eight. The Core i9-9900K didn’t stand a chance except in single-threaded applications and gaming, and even then hardly anyone cared that the 9900K could get a few more frames than the 3950X.

This doubling of core counts wasn’t limited to desktops, though. Threadripper and Epyc both went from 32 cores to 64, and although Intel tried to close the gap with a 56-core Xeon CPU, that didn’t matter because Xeon had lost its lead in power efficiency. Power efficiency is like gold dust in the data center business, because worse efficiency means paying more to power the servers and to cool them. Epyc, built on the 7nm node, was much more power efficient.

For the first time in over a decade, AMD regained the technological lead. This didn’t mean data centers and PCs would suddenly switch to AMD, of course. With Intel still floundering on its 10nm node, though, AMD had ample time to gradually take market share, develop its ecosystem, and ultimately make money like it never had before. But before AMD could truly start to build its new empire, it needed to strike at Intel’s final bastion: mobile.

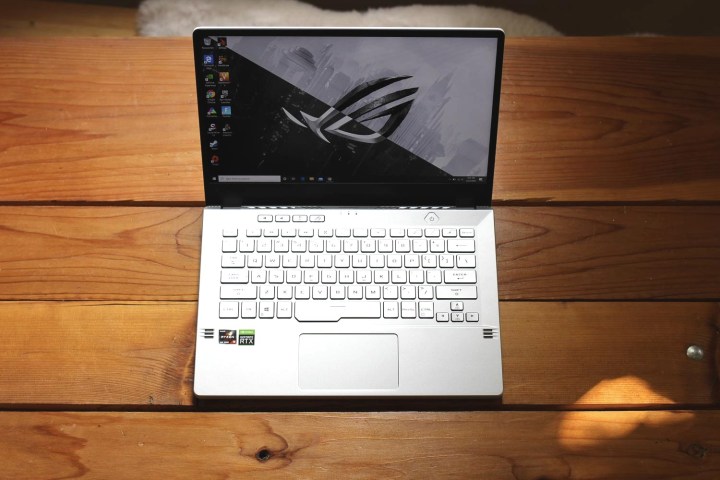

Ryzen 9 4900HS

AMD wept, for there were no more worlds to conquer

AMD’s 7nm APUs were scheduled for early 2020, and although Intel would have liked to use the time to protect its lucrative laptop business, it had little to work with. 10nm was finally working, but only well enough to produce quad-core CPUs, and those quad-cores were hardly any better than their 14nm predecessors. The question wasn’t whether AMD would beat Intel, but by how much.

One key difference with AMD’s 7nm APUs was that they didn’t use chiplets like the Zen 2 desktop and server chips, and instead used a traditional monolithic design. Although chiplets were very good for high-performance chips, they weren’t ideal for chips targeting low power consumption, especially at idle, because moving data between separate dies costs extra power. The next-generation APUs weren’t going to come with crazy core counts, but AMD didn’t need those to win.

Ryzen 4000 launched in early 2020 (just as the COVID-19 pandemic began), and although there weren’t many Ryzen 4000

Equipped with the eight-core flagship Ryzen 9 4900HS, the Asus ROG Zephyrus G14 was unbelievably fast for its size. In our review, we found that the 4900HS could keep up with Intel’s flagship Core i9-9980HK, which appeared in much larger devices with more robust cooling. Not only that, the G14’s battery life was the best of any

Ryzen 4000 completed AMD’s comeback and established the company as having the clear technological edge over Intel. AMD still needed to consolidate its gains and build up its ecosystem, but beating Intel was the crucial first step that made everything else possible. It was a new beginning for AMD, one nobody could have predicted.

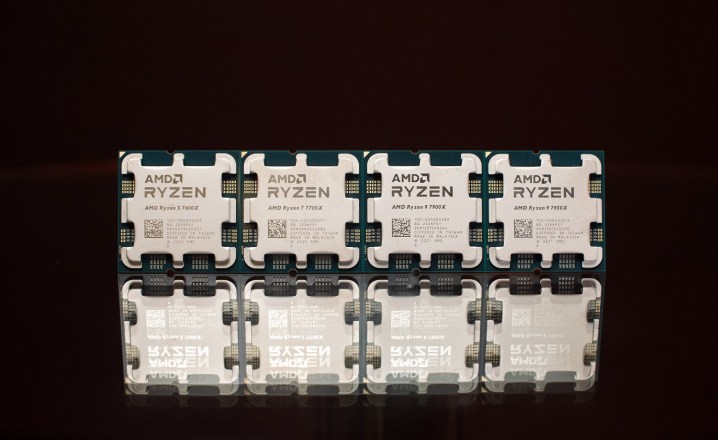

Beyond Ryzen 4000

The 4900HS was arguably AMD’s last great CPU, but that’s not to say the company has fallen on hard times again. Its Ryzen 5000 series in late 2020 brought significant architectural improvements, but also a price increase. AMD also took a very long time to release budget Ryzen 5000 parts, some of which didn’t arrive until 2022. Ryzen 6000 launched in early 2022 as well, but only as mobile APUs, and even those were just a refinement of the previous generation.

To further complicate things, Intel has made a comeback of its own with its 12th-gen Alder Lake CPUs like the Core i9-12900K, which is arguably just as good as Ryzen 5000, though it was a year late to the party. But AMD still has the upper hand in lower-power

As for the future, things look bright. AMD recently unveiled its Ryzen 7000 series, and it looks promising. It appears to be significantly faster than both Ryzen 5000 and Alder Lake, and well equipped to take on Intel’s upcoming Raptor Lake CPUs. Pricing also seems acceptable and is certainly an improvement over Ryzen 5000. Of course, we’ll have to wait for the reviews before it can be considered one of the best CPUs AMD has ever made, but I wouldn’t be surprised if it made the list.