Google announced at its I/O developer conference on Wednesday that it plans to launch a tool to help users determine whether images that show up in its search results are AI-generated.

With the increasing popularity of AI-generated content, there is a growing need to confirm whether content is authentic, meaning created by humans, or whether it has been generated by AI.

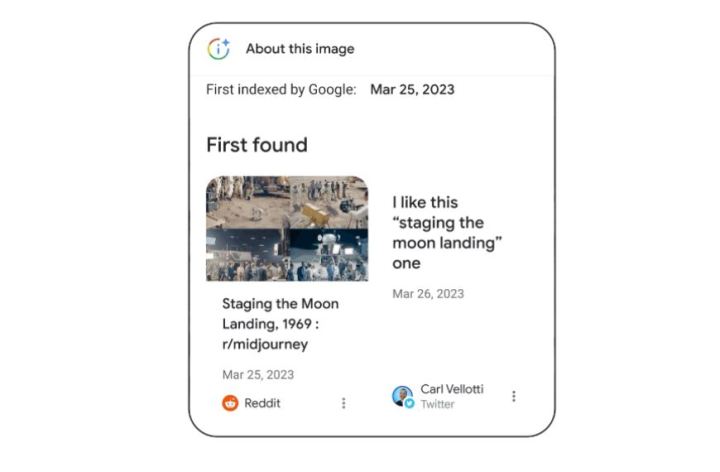

The tool, aptly named About this image, will essentially serve as EXIF (Exchangeable Image File Format) data for AI-generated images and will be available this summer. It will let you access information about an image to help determine whether it is authentic, human-created content.

The tool will include information about when the image was first indexed by Google, where it first showed up online, and where else it has been featured, Google said in a blog post.

You will be able to access it by clicking the three dots in the upper-right corner of an image in search results. Alternatively, you can look up these details via Google Lens or by swiping up in the Google app.

Given how Google image search already operates, this feels like it might actually work.

The company added that the tool has been developed to help battle misinformation online. It cited a 2022 Poynter study in which 62% of respondents said they believed they had been subjected to false information either daily or weekly.

Viral AI-generated images that many people believed were real include one of Pope Francis wearing a fancy white puffer coat and another of Donald Trump being arrested, both of which were created with the AI image generator Midjourney.

Notably, the About this image tool comes on the heels of Google’s plans to launch its own text-to-image generator, which the company says will include data in its images so that viewers can identify them as AI-generated.

Google also noted that other image companies, such as Shutterstock and Midjourney, plan to introduce similar features for their AI-generated content soon.