Intel’s hotly anticipated Xe Supersampling (XeSS) tech is finally here, arriving a couple of weeks before Intel’s Arc Alchemist GPUs show up. It’s available now in Death Stranding and Shadow of the Tomb Raider, and more games are sure to come. But right now, it’s really difficult to recommend turning XeSS on.

Bugs, lackluster performance, and poor image quality have sent XeSS off to a rough start. Although there are glimmers of hope (especially with Arc’s native usage of XeSS), Intel has a lot of work ahead to get XeSS on the level of competing features from AMD and Nvidia.

Spotty performance

Before getting into performance and image quality, it’s important to note that there are two upscaling models for XeSS. One is for Intel’s Arc Alchemist GPUs, while the other uses DP4a instructions on GPUs that support them. Both use AI, but the DP4a version can’t do the calculations nearly as fast as Arc’s dedicated XMX cores. Because of that, the DP4a version uses a simpler upscaling model. On Arc GPUs, both performance and image quality should be better.
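For a sense of what a DP4a instruction actually computes, here is a minimal Python sketch. It multiplies four pairs of signed 8-bit integers packed into two 32-bit words and adds the sum to an accumulator. The function names are hypothetical and chosen for illustration; real GPUs do this in a single instruction and wrap the accumulator at 32 bits, which this sketch ignores. It is not Intel’s XeSS code, only an emulation of the arithmetic.

```python
def pack_int8x4(values):
    """Pack four signed 8-bit integers into one 32-bit word (lane 0 in the low byte)."""
    if len(values) != 4:
        raise ValueError("expected exactly four values")
    word = 0
    for lane, value in enumerate(values):
        if not -128 <= value <= 127:
            raise ValueError("value does not fit in a signed 8-bit integer")
        word |= (value & 0xFF) << (8 * lane)
    return word


def unpack_int8x4(word):
    """Recover the four signed 8-bit lanes from a packed 32-bit word."""
    lanes = []
    for lane in range(4):
        byte = (word >> (8 * lane)) & 0xFF
        lanes.append(byte - 256 if byte >= 128 else byte)
    return lanes


def dp4a(a_word, b_word, accumulator):
    """Emulate DP4a: a 4-element int8 dot product added to an integer accumulator.

    Assumption: accumulator overflow is ignored (hardware wraps at 32 bits).
    """
    a = unpack_int8x4(a_word)
    b = unpack_int8x4(b_word)
    return accumulator + sum(x * y for x, y in zip(a, b))


a = pack_int8x4([1, 2, 3, 4])
b = pack_int8x4([5, 6, 7, 8])
print(dp4a(a, b, 10))  # 1*5 + 2*6 + 3*7 + 4*8 + 10 = 80
```

Neural network inference is mostly long chains of these multiply-and-accumulate steps, which is why a GPU without dedicated matrix hardware can still run the simpler model, just more slowly.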

We don’t have Arc GPUs yet, so I tested the DP4a version. To avoid any confusion, I’ll refer to it as “XeSS Lite” for the remainder of this article.

That’s the most fitting name because XeSS Lite isn’t the best showcase of Intel’s supersampling tech. Death Stranding provided the most consistent experience, and it’s the best point of comparison because it includes Nvidia’s Deep Learning Super Sampling (DLSS) and AMD’s FidelityFX Super Resolution 2.0 (FSR 2.0).

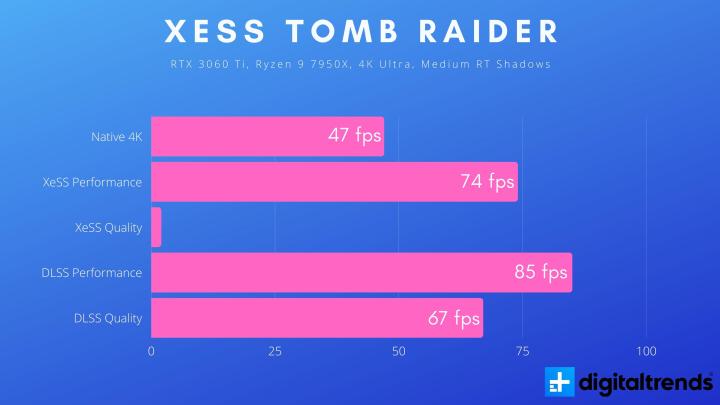

With the RTX 3060 Ti and a Ryzen 9 7950X, XeSS trailed in both its Quality and Performance modes. DLSS is the performance leader, but FSR 2.0 isn’t far behind (about 6% lower in the Performance mode). XeSS in its Performance mode is a full 18% behind DLSS. XeSS still provides nearly a 40% boost over native resolution, but DLSS and FSR 2.0 are significantly ahead (71% and 61% over native, respectively).
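These two sets of percentages describe the same results from different baselines: the boosts are measured against native resolution, while the gaps are measured against DLSS. A short Python sketch shows how they line up. The function name is hypothetical, and treating “nearly 40%” as exactly 0.40 is an assumption made for the arithmetic.

```python
def relative_gap(uplift_a, uplift_b):
    """Fraction by which upscaler A trails upscaler B in frame rate.

    Both arguments are uplifts over native resolution, expressed as
    fractions (0.71 means 71% faster than native).
    """
    return 1 - (1 + uplift_a) / (1 + uplift_b)


# Performance-mode uplifts over native on the RTX 3060 Ti, from the text.
# Assumption: "nearly 40%" is rounded to 0.40 for XeSS.
dlss, fsr2, xess = 0.71, 0.61, 0.40

print(f"FSR 2.0 trails DLSS by {relative_gap(fsr2, dlss):.1%}")  # 5.8%
print(f"XeSS trails DLSS by {relative_gap(xess, dlss):.1%}")     # 18.1%
```

The results round to the roughly 6% and 18% gaps quoted above, so the figures are consistent with one another.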

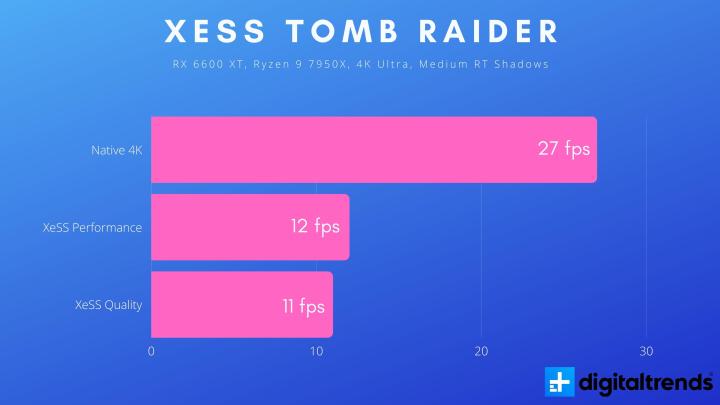

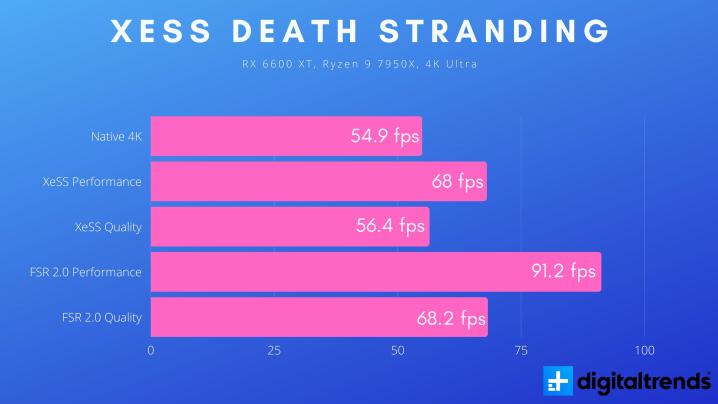

The situation is worse with AMD’s RX 6600 XT. It seems XeSS Lite heavily favors Nvidia’s GPUs at the moment, as XeSS only provided a 24% boost in its Performance mode. That may sound decent, but consider that FSR 2.0 provides a 66% jump. In Quality mode, XeSS provided basically no benefit, with only a 3% increase.

Shadow of the Tomb Raider also shows the disparity between recent Nvidia and AMD GPUs, but I’ll let the charts do the talking on that front. There’s a much bigger story with Shadow of the Tomb Raider. Across both the RX 6600 XT and RTX 3060 Ti, XeSS would consistently break the game.

I was finally able to get the Performance mode to work by setting the game to exclusive fullscreen and turning on XeSS in the launcher (thank goodness this game has a launcher). If I turned on XeSS in the main menu, the game would slow to a slideshow. And in the case of the Quality mode, I couldn’t get a consistent run even with the launcher workaround.

A new update for Shadow of the Tomb Raider reportedly fixes the bug, but we haven’t had a chance to retest yet. For now, make sure to update to the latest version of the game if you want to use XeSS.

I tried out Shadow of the Tomb Raider on my personal rig with an RTX 3080 12GB, and it worked great without the launcher workaround. This is the case for many GPUs, and the update should fix the startup crashes that were occurring for others.

Poor image quality

The performance for XeSS isn’t great right now, but the more disappointing factor is image quality. It lags behind FSR 2.0 and DLSS a bit in the Quality mode, but bumping down to the Performance mode shows just how far behind XeSS is right now in this regard.

Shadow of the Tomb Raider is the best example of that. DLSS looks a bit better, utilizing sharpening to pull out some extra detail on the distant skull below. XeSS falls apart. In Performance mode in Shadow of the Tomb Raider, XeSS looks like you’re simply running at a lower resolution.

- Shadow of the Tomb Raider XeSS Performance comparison

This is zoomed in quite a bit, so the difference isn’t nearly as stark when zoomed out. And the Quality mode holds up decently. It still suffers from the low-res look when zoomed in so much, but the differences are much harder to spot in Quality mode when you’re actually playing the game.

- Shadow of the Tomb Raider XeSS Quality comparison

Death Stranding tells a different story, largely because it includes FSR 2.0. In Quality mode, FSR 2.0 and native resolution are close, aided a lot by FSR 2.0’s aggressive sharpening. DLSS isn’t quite as sharp, but it still manages to maintain most of the detail on protagonist Sam Porter Bridges. XeSS is a step behind, though the gap isn’t as stark as in Shadow of the Tomb Raider. It manages to reproduce details, but they’re not as well-defined. See the hood and shoulder on Bridges and the rock behind him.

- Death Stranding XeSS Quality comparison

Performance mode is where things get interesting. Once again FSR 2.0 is ahead in a still image with its aggressive sharpening, but XeSS and DLSS are almost identical. Frame rates are still behind, and Intel still needs to work with developers for better XeSS implementations. But this showcases that XeSS can be competitive. One day, at least.

- Death Stranding XeSS Performance comparison

Just like with performance, it’s important to keep in mind that this isn’t the full XeSS experience. Without Arc GPUs to test yet, it’s hard to say if XeSS’ image quality will improve once it’s running on the GPUs it was intended to run on. For now, XeSS Lite is behind on image quality, though Death Stranding is proof that it could catch up.

Will XeSS hold up on Arc?

XeSS is built first and foremost for Intel Arc Alchemist, so although these comparisons are useful now, it’s all going to come down to how XeSS can perform once Arc GPUs are here. XeSS Lite still needs some work, especially in Shadow of the Tomb Raider, but Death Stranding is a promising sign that the tech can get there eventually.

Even then, it’s clear that XeSS isn’t the end-all-be-all supersampling tech it was billed as. It’s possible that by trying to do both machine learning and general-purpose supersampling, XeSS will lose on both fronts. For now, though, we just have to wait until we can test Intel’s GPUs with XeSS.
