Probably not. Millions of people have cut the cord from their cable service, instead relying on Netflix, Hulu, and Amazon Prime Video for entertainment. Game consoles have also stepped into the void left by old-fashioned, scheduled programming. There are more gamers than ever, playing longer than ever.

In short, the way people use televisions has changed. Maybe it's time for the TV to change, too. Nvidia's Big Format G-Sync Displays (BFGDs), which debuted on the show floor at CES, show one possible future for the TV: a future that focuses on gaming, streaming video, and smooth delivery of any content thrown at it.

Just don’t call it a monitor

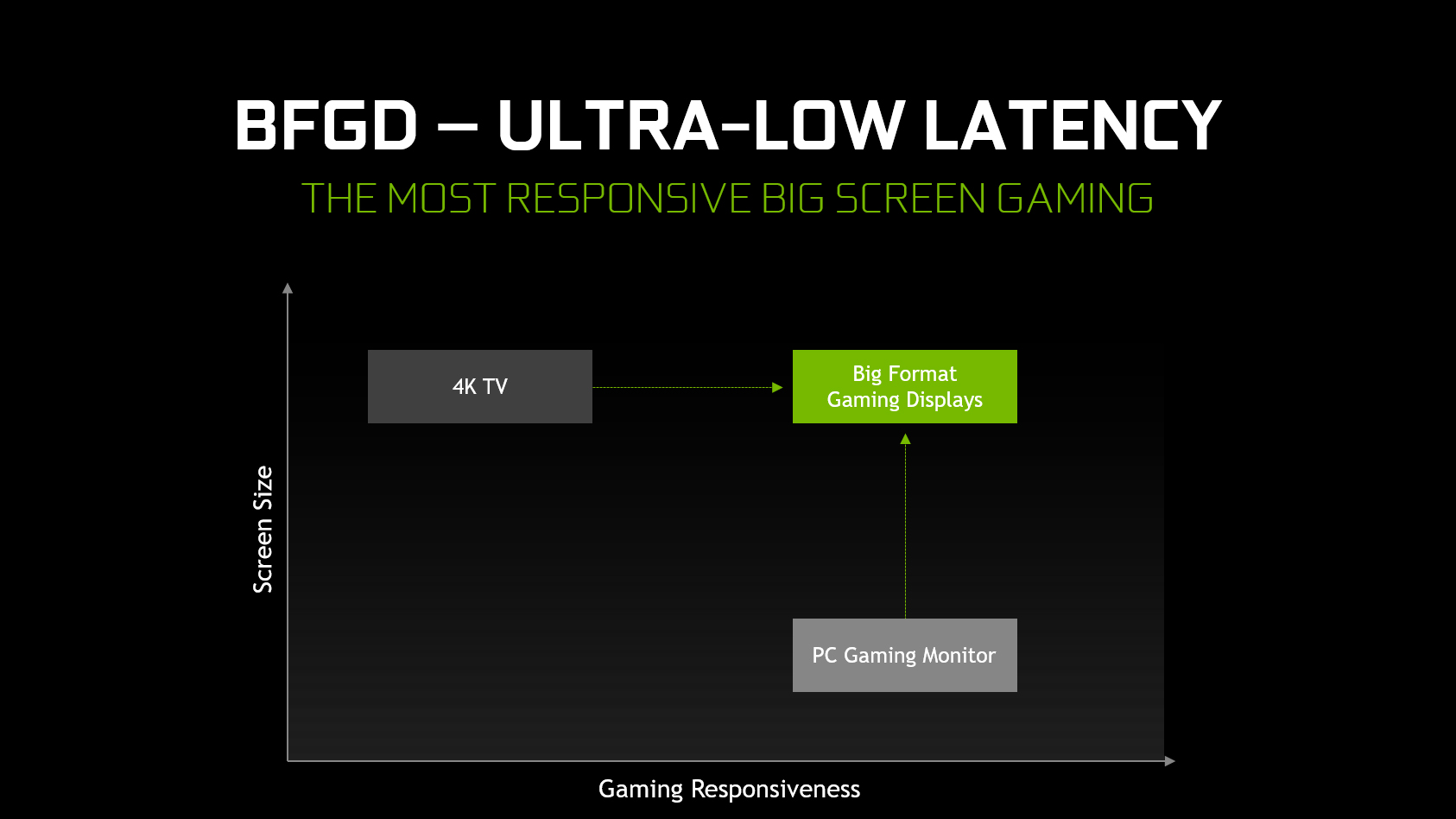

It would be tempting to dismiss the BFGDs as 65-inch monitors. They’re designed to connect over DisplayPort 1.4, instead of HDMI (though HDMI is present for audio), and the early marketing positions them as the ultimate accessory for a PC-gaming den.

That sells the BFGDs short. Yeah, they're targeting the PC, but they also have an Nvidia Shield built in. The Shield, if you're not familiar, is a cross between a Roku and a bare-bones Android game console. It can handle all the online streaming apps you'd expect from an entertainment box, and it can play games: both Android titles and games available through Nvidia's GeForce Now subscription streaming service.

Think of it as a smart TV without a TV tuner. A very smart TV. It’s not embroiled in any stupid competition between streaming services’ corporate overlords. It can play popular games without any additional hardware. And it’ll receive all the same updates as the Shield console, which should mean a steady stream of new features over the years.

A different approach to image quality

The smart features that’ll come bundled in every BFGD are far more modern than the hodgepodge interfaces that ship with many televisions, but that’s less than half of what makes them great. The real secret sauce can be found in the BFGDs’ radically different approach to image quality.

A typical top-tier television from LG, Samsung, or Vizio is built to deliver maximum visual punch. It seeks to maximize contrast, serve up a wide color gamut, and minimize artifacts. The results are undeniably spectacular, but there's a downside: modern televisions have high latency and confusing image quality settings, and they can suffer unusual frame-pacing problems when they're not fed ideal content.

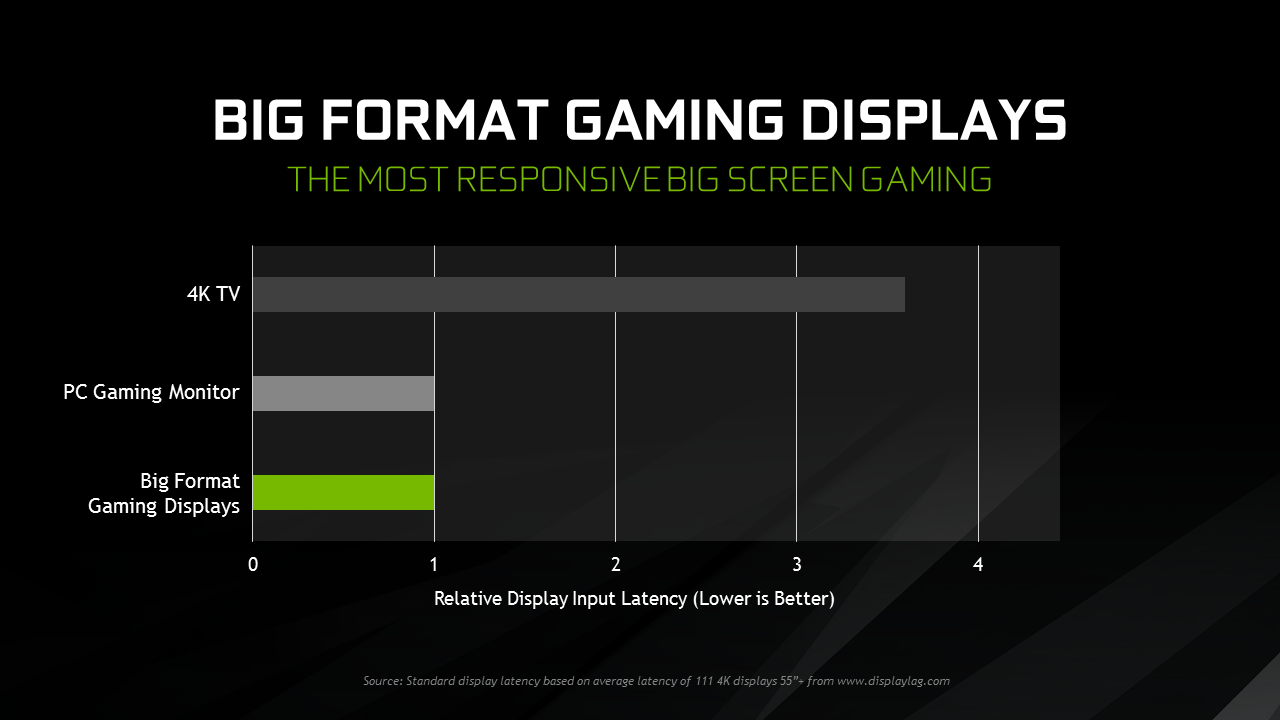

BFGDs are different. They do have HDR and 4K resolution and, according to Nvidia, use a panel built with a technique similar to Samsung's Quantum Dots. Yet they're also fast and fluid. Every BFGD will offer a refresh rate of at least 120Hz. Latency numbers aren't being quoted yet, but Nvidia told us that even 16 milliseconds would be considered "really quite high." LG's and Samsung's best displays can't dip below 20 milliseconds, even in game mode.

Then there's Nvidia's not-so-secret weapon: G-Sync. It synchronizes the BFGD's refresh rate with the frame rate of whatever G-Sync-capable device is feeding it, including the built-in Shield. That synchronization works with any content, including video. It doesn't matter whether a video was shot at 24, 29.97, 60, or 120 frames per second; it will display smoothly, without any added stutter or lag caused by the display.
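To see why that matters, consider the arithmetic of showing 24fps film on an ordinary fixed 60Hz screen. Sixty isn't a multiple of 24, so some frames linger for three refreshes and others for two. The Python sketch below is a simplified model, not Nvidia's implementation: it assumes each frame appears at the first refresh tick at or after its timestamp, and the function names are hypothetical. It compares that uneven cadence with an idealized variable-refresh display that holds every frame for exactly one frame interval. (Real G-Sync panels have a supported refresh range and may repeat frames at low rates, but each source frame still gets equal screen time.)

```python
import math
from fractions import Fraction


def fixed_refresh_hold_times(content_fps, refresh_hz, frames):
    """Milliseconds each source frame stays on screen on a fixed-refresh display.

    Simplified model (an assumption): frame i is shown from the first refresh
    tick at or after its nominal timestamp until the next frame takes over.
    """
    ratio = Fraction(refresh_hz) / Fraction(content_fps)  # refreshes per source frame
    refresh_ms = 1000.0 / refresh_hz
    holds = []
    for i in range(frames):
        start_tick = math.ceil(i * ratio)
        end_tick = math.ceil((i + 1) * ratio)
        holds.append((end_tick - start_tick) * refresh_ms)
    return holds


def vrr_hold_times(content_fps, frames):
    """Idealized variable refresh: every frame is held for one frame interval."""
    return [1000.0 / content_fps] * frames


# 24fps film on a 60Hz TV: frames alternate between 3 and 2 refreshes (3:2 judder).
print([round(h, 2) for h in fixed_refresh_hold_times(24, 60, 4)])
# → [50.0, 33.33, 50.0, 33.33]

# The same film with the refresh rate matched to the content: an even cadence.
print([round(h, 2) for h in vrr_hold_times(24, 4)])
# → [41.67, 41.67, 41.67, 41.67]
```

The alternating 50ms and 33ms holds are the stutter you can see in slow camera pans. Matching the refresh to the content removes that unevenness entirely.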

BFGDs could be a BFD

Acer, Asus, and HP are lined up to build the first BFGDs, all of them 65-inchers using the same panel. I doubt they’ll sell anywhere near the volume of modern televisions. At least, not at first. But if Nvidia and its partners can deliver on the BFGD’s promise, it won’t just be PC gamers who take notice.

You can expect to see the first BFGDs in the second half of 2018. Pricing hasn’t been announced.
